Our brain – the most exciting computer of all time

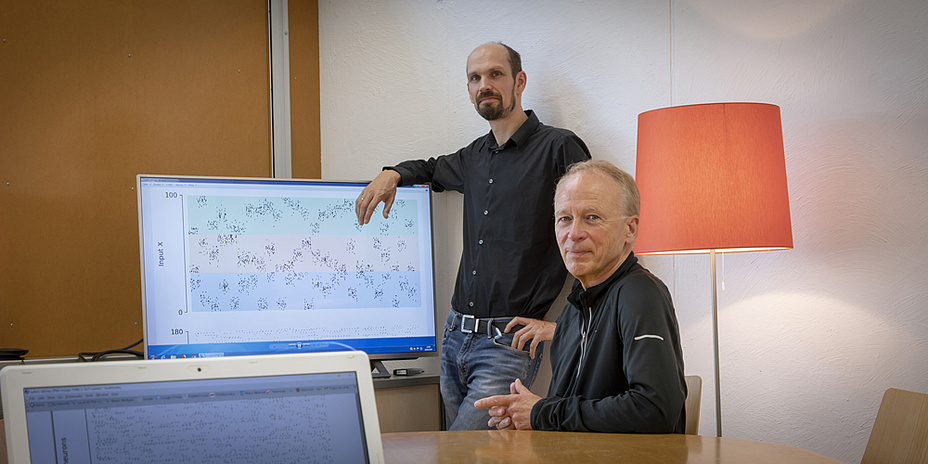

‘Colleagues in neuroscience think that we basically know less today about how the human brain works than we did ten years ago,’ says Wolfgang Maass. The computer scientist specialises in finding out how the computer centre in our heads processes information and how its way of working can be applied to computers. The human brain is a complex, extremely efficient and powerful organ for processing information. Because it also manages with about a million times less energy than a supercomputer, despite having roughly the same number of computing elements, it has become the subject of intensive research in industry in recent years.

Another reason is the end of Moore’s law: ‘There are intensive discussions about whether today’s most common computer architectures are reaching the limits of their performance,’ explains Maass. ‘Everyone agrees, however, that new ways of building computers must be found if their performance is to keep increasing.’

In 2013 the European Union launched the Human Brain Project, a Europe-wide research alliance whose goals are to promote research relevant to the human brain, to establish research infrastructure and to use the knowledge gathered to build new computer architectures. The project, scheduled to run for ten years, is now about halfway through. Wolfgang Maass and his team are responsible for the Principles of Brain Computation work package in the Human Brain Project. They have set themselves the task of investigating how the brain processes information and of finding ways to apply this knowledge to the development of new computer architectures. ‘And that requires a lot of innovation,’ says Maass. ‘The human brain works in a completely different way from all the circuits we are familiar with.’

The Human Brain Project is working on decoding the human brain.

Brain versus computer

Today’s computer systems work on the Turing principle: a universal but fixed computing principle with which any application can be processed. ‘That was what was revolutionary about Turing’s system: you didn’t need a new computer for each application, but instead had a system that is universally applicable,’ explains Maass. Our brains, by contrast, work entirely differently. ‘Unlike computers, the brain does not code and process information with bits, in other words abstract units of information. Instead it uses spikes: short voltage increases that occur as spatio-temporal events,’ says Maass. ‘But the spikes of the various neurons do not carry the same “weight” in this processing. You can imagine it a bit like interpersonal communication: we take an opinion from a group of people more seriously than one from a single person.’
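To make the idea of weighted spikes a little more concrete, here is a minimal sketch of a textbook leaky integrate-and-fire neuron. It is an illustration under our own assumptions, not the model used by Maass’s team: the function name `simulate_lif`, the time constant, the threshold and the weights are all hypothetical. Each incoming spike adds its synaptic weight to the neuron’s membrane potential, which slowly leaks back towards zero; the neuron fires once the potential crosses a threshold.

```python
import math
from typing import List


def simulate_lif(spike_trains: List[List[int]], weights: List[float],
                 tau: float = 20.0, threshold: float = 1.0,
                 dt: float = 1.0) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron fires.

    spike_trains[i][t] is 1 if input neuron i spikes at step t, else 0.
    weights[i] is the (illustrative) synaptic weight of input i.
    """
    decay = math.exp(-dt / tau)  # fraction of potential kept per step (leak)
    v = 0.0                      # membrane potential
    output_spikes = []
    for t in range(len(spike_trains[0])):
        # Leak, then add the weighted sum of the spikes arriving at step t.
        v = v * decay + sum(w * train[t] for w, train in zip(weights, spike_trains))
        if v >= threshold:
            output_spikes.append(t)
            v = 0.0              # reset after an output spike
    return output_spikes


if __name__ == "__main__":
    burst = [0, 0, 1, 0, 0]
    # Four weak inputs spiking together cross the threshold ...
    print(simulate_lif([burst] * 4, [0.3] * 4))  # → [2]
    # ... but one of them on its own does not.
    print(simulate_lif([burst], [0.3]))          # → []
```

The usage lines echo the ‘group opinion’ analogy: several weakly weighted inputs agreeing at the same moment make the neuron fire, while a single one of them is ignored.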

Brain-inspired computing

Maass’s team is currently working on a simulation that reproduces how the brain’s circuits work. ‘You mustn’t forget that this complex organ is the product of evolution, and that it developed without a clear blueprint or clear design principles,’ says Maass. For this reason, the team is producing powerful neural networks through an evolutionary process simulated on the computer. Deep learning methods, which the team has adapted for this task, are very useful here. The findings from analysing the resulting networks are fascinating: ‘Information can be processed much more efficiently and robustly with spikes than with any of the networks known up to now.’

The team of the Institute of Theoretical Computer Science.

Synaptic plasticity and learning

Robert Legenstein is also researching neuronal processes at the Institute of Theoretical Computer Science (IGI). One focus of his work is synaptic plasticity and its role in learning. Synapses are the communication channels across which neurons in the brain exchange information by means of spikes; a change in the properties of these channels is called synaptic plasticity. ‘One surprising realisation is that the brain is constantly changing even when a person is not learning. Connections between neurons emerge and then disappear again. The brain is constantly in motion. This is quite different from traditional computers, where the circuits are laid down from the very beginning and stay fixed,’ says Legenstein.

‘Our brain does not follow rigidly defined paths; instead, its neuronal connectivity is constantly fluctuating, and these fluctuations only become stronger when you’re learning something,’ he continues. One possible explanation for this constant change is that the brain strives towards a structure optimally adapted to the organism’s living conditions. Put simply: unnecessary connections are removed, while new ones form at random and are strengthened if they prove useful. The researchers reproduce these fluctuating connections in mathematical models and simulations. ‘Our simulations and theoretical analyses confirm that this principle actually implements a targeted search for optimal connectivity structures.’

These findings can also be applied to deep learning. Building on them, Legenstein’s team developed a learning algorithm for artificial neural networks that gets by with up to 50 times fewer connections than usual. Because fewer connections are needed, training is faster and more efficient and requires less memory and energy.
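The rewiring principle described above (connections that weaken disappear, new ones appear at random, and useful ones are strengthened) can be sketched in a few lines of Python. This is a hypothetical illustration, not the algorithm from the ICLR paper: the function `rewiring_step`, the rule that a connection vanishes when its parameter drops to zero or below, and the values of `lr`, `noise` and `init` are all our own assumptions. What it does show is how a network can keep a fixed, small budget of connections throughout training, which is one way such memory savings can arise.

```python
import random
from typing import List, Tuple


def rewiring_step(theta: List[float], active: List[bool], grad: List[float],
                  rng: random.Random, lr: float = 0.05, noise: float = 0.01,
                  init: float = 1e-3) -> Tuple[List[float], List[bool]]:
    """One hypothetical rewiring update on a flattened, sparse weight vector.

    theta  : connection parameters (only meaningful where active[i] is True)
    active : which of the possible connections currently exist
    grad   : loss gradient with respect to theta (same length)
    """
    theta, active = list(theta), list(active)
    # 1. Gradient step plus a random fluctuation, only on existing connections.
    for i in range(len(theta)):
        if active[i]:
            theta[i] += -lr * grad[i] + rng.gauss(0.0, noise)
    # 2. Prune: a connection whose parameter drops to zero or below disappears.
    pruned = [i for i in range(len(theta)) if active[i] and theta[i] <= 0.0]
    for i in pruned:
        active[i], theta[i] = False, 0.0
    # 3. Regrow as many connections as were pruned, at random free positions,
    #    so the total number of connections (the memory budget) stays fixed.
    free = [i for i in range(len(theta)) if not active[i]]
    for i in rng.sample(free, len(pruned)):
        active[i], theta[i] = True, init
    return theta, active


if __name__ == "__main__":
    rng = random.Random(0)
    n, budget = 100, 10          # 100 possible connections, only 10 may exist
    active = [False] * n
    for i in rng.sample(range(n), budget):
        active[i] = True
    theta = [0.01 if a else 0.0 for a in active]
    for _ in range(200):
        # Random numbers stand in for the gradient of a real training loss.
        grad = [rng.gauss(0.0, 1.0) for _ in range(n)]
        theta, active = rewiring_step(theta, active, grad, rng)
    print(sum(active))           # → 10
```

Only the active connections ever need to be stored, so memory grows with the budget rather than with the number of possible connections.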

The model of fluctuating connections in the brain is described in a paper recently published in the journal eNeuro. The full paper can be viewed on the eNeuro website.

How the learning algorithm works was recently presented at the International Conference on Learning Representations (ICLR). The paper can be found on the conference website.

The algorithm is currently being tested in cooperation with TU Dresden on the SpiNNaker 2 chip developed there. An artificial neural network trained to recognise handwritten digits was implemented on the chip. Thanks to the learning algorithm, the memory required during training was reduced by a factor of 30. Comparing the power consumption of the SpiNNaker 2 chip with that of a chip in a conventional computer shows the advantage of this system: whereas the computer needs 20 watts to train the network, the SpiNNaker 2 chip manages with just 88 milliwatts using the learning algorithm.
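For a sense of scale, the two power figures quoted above can be compared directly. This is simple arithmetic on the numbers in the text only; the symbols are our own labels, and since training times are not given, it compares power draw rather than total energy.

```latex
\frac{P_{\text{conventional}}}{P_{\text{SpiNNaker 2}}}
  = \frac{20\ \text{W}}{88\ \text{mW}}
  = \frac{20\ \text{W}}{0.088\ \text{W}}
  \approx 227
```

On these figures, the chip draws roughly 227 times less power while training the network.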

Will we soon have decoded the human brain?

Both researchers give a very clear answer to this question: ‘It will take a very long time.’ The human brain remains one of the most complex and fascinating areas of research, and every step forward reveals new puzzles that in turn have to be solved.

This research project is assigned to the Field of Expertise ‘Information, Communication & Computing’, one of TU Graz’s five strategic areas of research.

Visit Planet research for more research-related news.

Contact

Robert LEGENSTEIN

Institute of Theoretical Computer Science

Inffeldgasse 16b/I | 8010 Graz

Phone: +43 316 873 5824

legi@igi.tugraz.at

Wolfgang MAASS

Institute of Theoretical Computer Science

Inffeldgasse 16b/I | 8010 Graz

Phone: +43 316 873 5822

maass@igi.tugraz.at