Full 6-DOF Localization Framework

3D sparse reconstruction of a single room.

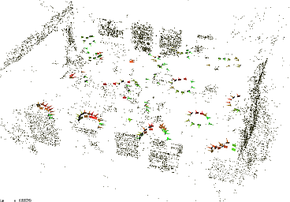

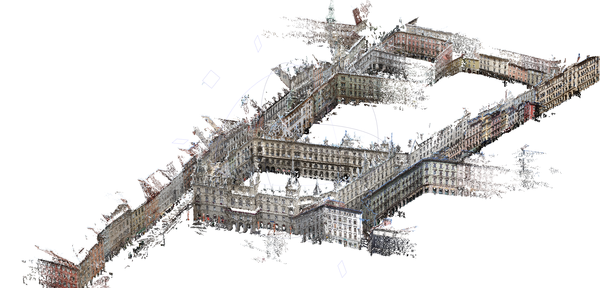

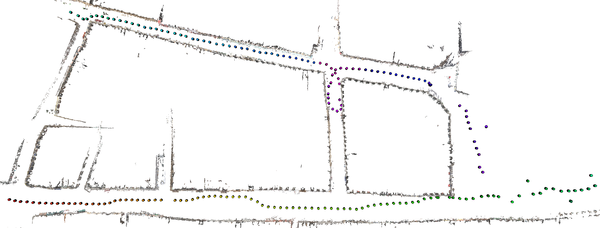

3D sparse reconstruction of the city center of Graz, Austria.

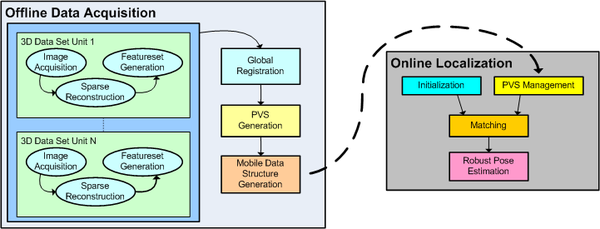

Given an approximate position of a mobile phone (from GPS coordinates or Wi-Fi triangulation), the server sends the relevant data to the client on request. With these data, the mobile phone application can instantly compute a full 6DOF pose from the current image of the phone camera.

Localization from Ordinary Images (2009)

The features from the 2D input image are matched against the dataset of fully globally registered 3D points, and a robust 3-point pose algorithm is used to find the correct pose.
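The robust estimation step can be pictured as a hypothesize-and-verify loop. The Python sketch below is an illustration under stated assumptions, not our implementation: the pose convention x_cam = R x_world + t, the pixel threshold and all function names are chosen for this example, and `minimal_solver` is a hypothetical placeholder for a 3-point (P3P) solver, which is not implemented here. Each candidate pose is scored by counting the 2D-3D matches it reprojects to within a few pixels.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
Pose = Tuple[Mat3, Vec3]  # (R, t) with x_cam = R x_world + t
Match = Tuple[Tuple[float, float], Vec3]  # (observed pixel, 3D point)
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy)


def project(pose: Pose, X: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a world point; None if it lies behind the camera."""
    R, t = pose
    xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 1e-9:
        return None
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy


def count_inliers(pose: Pose, matches: Sequence[Match], intr: Intrinsics, thresh_px: float) -> List[int]:
    """Indices of 2D-3D matches whose reprojection error is below thresh_px."""
    inliers = []
    for k, (uv, X) in enumerate(matches):
        p = project(pose, X, *intr)
        if p is not None and math.hypot(p[0] - uv[0], p[1] - uv[1]) < thresh_px:
            inliers.append(k)
    return inliers


def ransac_pose(
    matches: Sequence[Match],
    intr: Intrinsics,
    minimal_solver: Callable[[List[Match], Intrinsics], List[Pose]],
    iterations: int = 200,
    thresh_px: float = 4.0,
    seed: int = 0,
) -> Tuple[Optional[Pose], List[int]]:
    """Solve from 3 random matches, keep the pose supported by the most inliers.

    minimal_solver stands in for a P3P solver, which can return up to four
    candidate poses per sample; it is not implemented here.
    """
    rng = random.Random(seed)
    best_pose: Optional[Pose] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        sample = rng.sample(list(matches), 3)
        for pose in minimal_solver(sample, intr):
            inliers = count_inliers(pose, matches, intr, thresh_px)
            if len(inliers) > len(best_inliers):
                best_pose, best_inliers = pose, inliers
    return best_pose, best_inliers
```

With a real P3P solver plugged in, the winning pose would typically be refined on all of its inliers afterwards.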

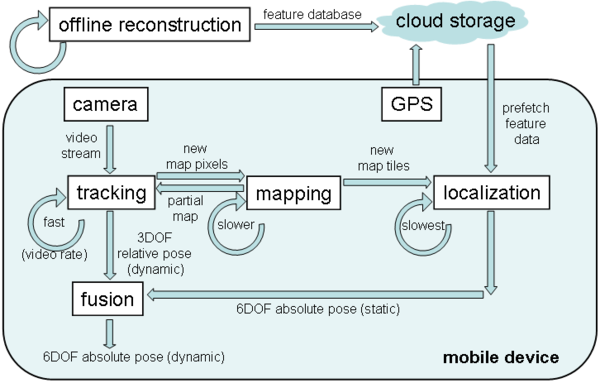

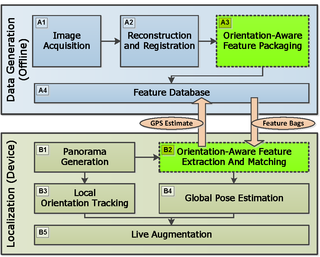

Workflow during the offline and the online stage of our framework. In the offline stage, sparse feature models are calculated and then partitioned into individual blocks according to visibility constraints. The mobile data structure is created and transferred to a mobile client on request. The mobile client can then robustly calculate a full 6DOF pose.
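A minimal sketch of the block idea, assuming a simple metric grid in local east/north coordinates rather than the visibility and occlusion-culling partitioning used in our system: offline, each 3D point is assigned to the grid cells from which a reconstruction camera observed it; online, the blocks near the approximate position are merged and sent to the client. The grid, the cell size and the function names are hypothetical, and the conversion from GPS coordinates to a local metric frame is omitted.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Cell = Tuple[int, int]


def cell_of(x: float, y: float, cell_m: float) -> Cell:
    """Map a local metric position (east, north) to a grid cell index."""
    return (math.floor(x / cell_m), math.floor(y / cell_m))


def build_blocks(observations: Dict[int, Iterable[Tuple[float, float]]], cell_m: float) -> Dict[Cell, Set[int]]:
    """Offline: a point joins the block of every cell from which a camera observed it."""
    blocks: Dict[Cell, Set[int]] = defaultdict(set)
    for point_id, cam_positions in observations.items():
        for x, y in cam_positions:
            blocks[cell_of(x, y, cell_m)].add(point_id)
    return dict(blocks)


def points_for_prior(blocks: Dict[Cell, Set[int]], x: float, y: float, radius_m: float, cell_m: float) -> Set[int]:
    """Online: union of the blocks whose cells come within radius_m of the position prior."""
    cx, cy = cell_of(x, y, cell_m)
    reach = math.ceil(radius_m / cell_m)
    selected: Set[int] = set()
    for i in range(cx - reach, cx + reach + 1):
        for j in range(cy - reach, cy + reach + 1):
            # closest point of cell (i, j) to the prior
            nx = min(max(x, i * cell_m), (i + 1) * cell_m)
            ny = min(max(y, j * cell_m), (j + 1) * cell_m)
            if math.hypot(nx - x, ny - y) <= radius_m:
                selected |= blocks.get((i, j), set())
    return selected
```

Only the selected point set would then be packed into the mobile data structure, which keeps the transferred data proportional to the neighborhood of the user rather than to the whole reconstruction.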

Due to the limited field of view of the mobile phone camera, attaching an additional wide-angle lens to the camera is advisable. A large field of view is essential for good performance, especially in indoor environments.
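The benefit of a wide-angle lens follows from the pinhole relation between image width w, focal length f (both in pixels) and the horizontal field of view, 2 * atan(w / (2 f)). The helper below assumes an ideal, distortion-free pinhole model, which real wide-angle lenses only approximate.

```python
import math


def horizontal_fov_deg(image_width_px: float, focal_px: float) -> float:
    """Horizontal field of view of an ideal pinhole camera: 2 * atan(w / (2 f))."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * focal_px)))
```

For a hypothetical 640-pixel-wide image, halving the focal length from 500 to 250 pixels widens the horizontal field of view from roughly 65 to roughly 104 degrees.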

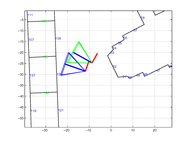

Color-coded path through three reconstructed rooms in our department, starting in the right room (red), walking through the middle room (pink) into the left room (cyan) and back into the right room (green).

Localization from Panoramic Images (2011)

To overcome the narrow field of view of standard cameras, we used a wide-angle lens attached to the mobile phone in earlier work. In this work, we use a special algorithm for generating panoramic images in real time (see Figure below). The details about the mapping and tracking algorithm can be found here.

A typical panoramic image captured with a mobile phone, without any exposure change compensation.

While the panoramic image is created, the features are matched against the database in the background, followed by a robust pose estimation step. The flowchart of the system is depicted in the Figure below. A part of the reconstruction is retrieved from the server given an approximate GPS position. The feature extraction, feature matching and pose estimation steps are integrated as background tasks while new pixels are mapped into the panoramic image from the live video feed. As soon as the localization succeeds, a full 6-DOF pose is obtained.
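The background-task structure described above can be sketched as follows. This is a hypothetical Python illustration, not the on-device implementation: the mapping loop hands copies of the features extracted so far to a single worker thread and never waits for it, and `try_localize` is an assumed callable standing for feature matching plus robust pose estimation.

```python
import queue
import threading
from typing import Callable, List, Optional


class BackgroundLocalizer:
    """Runs localization attempts on a worker thread while the caller keeps mapping.

    try_localize is a hypothetical callable: it receives a snapshot of the
    features extracted from the panorama so far and returns a pose, or None.
    """

    def __init__(self, try_localize: Callable[[List], Optional[object]]) -> None:
        self._try_localize = try_localize
        self._jobs: "queue.Queue[Optional[List]]" = queue.Queue(maxsize=1)
        self._found = threading.Event()
        self.pose: Optional[object] = None
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, features: List) -> None:
        """Hand over a copy of the current features; dropped if one is already waiting."""
        if self._found.is_set():
            return
        try:
            self._jobs.put_nowait(list(features))
        except queue.Full:
            pass  # the mapping loop never blocks on localization

    def wait(self, timeout: float) -> bool:
        """True once a pose has been found, False if the timeout expires first."""
        return self._found.wait(timeout)

    def stop(self) -> None:
        self._jobs.put(None)  # the worker keeps draining the queue, so this cannot deadlock
        self._thread.join()

    def _run(self) -> None:
        while True:
            snapshot = self._jobs.get()
            if snapshot is None:  # shutdown signal
                return
            if self._found.is_set():
                continue  # already localized; ignore late snapshots
            pose = self._try_localize(snapshot)
            if pose is not None:
                self.pose = pose
                self._found.set()
```

A queue of size one keeps at most one snapshot waiting, so a slow localization attempt never stalls panorama mapping; the mapping loop simply offers a newer, larger snapshot on a later frame.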

Flowchart of the localization system.

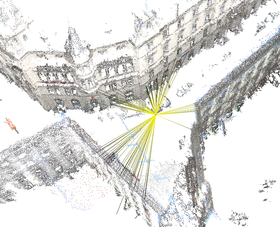

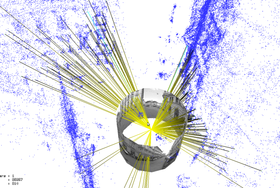

The result of the localization procedure is shown in the Figure below. The inliers are spread around the entire 360-degree panorama. The rays from the camera center directly hit the corresponding 3D points in the environment, passing through their projection in the image (i.e. the textured cylinder).
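The relation between rays, 3D points and the textured cylinder can be written as a cylindrical projection. The sketch below assumes a camera frame with x to the right, y down and z forward, a cylinder whose axis is the vertical y axis, and a panorama of width W pixels covering 360 degrees, so the cylinder radius is W / (2π) pixels; these conventions are chosen for illustration only. Any 3D point along the same ray from the camera center maps to the same panorama pixel.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def ray_to_panorama(d: Vec3, width: int, height: int) -> Tuple[float, float]:
    """Map a viewing ray (x right, y down, z forward) to a pixel (u, v) on a 360-degree cylinder.

    Undefined for rays along the cylinder axis (x = z = 0).
    """
    x, y, z = d
    radius = width / (2.0 * math.pi)  # cylinder radius in pixels
    theta = math.atan2(x, z)  # azimuth around the vertical axis, 0 = straight ahead
    u = (theta + math.pi) / (2.0 * math.pi) * width
    v = radius * y / math.hypot(x, z) + height / 2.0
    return u, v


def panorama_to_ray(u: float, v: float, width: int, height: int) -> Vec3:
    """Ray through panorama pixel (u, v); not unit length, any scaling hits the same pixel."""
    radius = width / (2.0 * math.pi)
    theta = u / width * 2.0 * math.pi - math.pi
    return (math.sin(theta), (v - height / 2.0) / radius, math.cos(theta))
```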

Localization result for a full 360-degree panoramic image. The yellow lines indicate the inliers used for establishing the pose estimate.

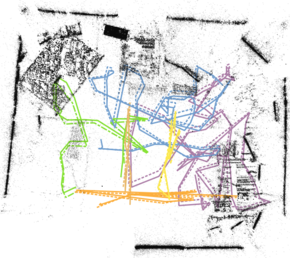

A path through the city center of Graz, Austria, is shown in the Figure below. Around 230 full panoramic images were acquired using an omnidirectional Point Grey Ladybug camera. A full, noise-free 360-degree panorama is obviously the ideal case for our localization procedure; however, the localization also works with distorted and noisy images. We conclude that increasing the field of view directly leads to a higher success rate and to improved accuracy of the localization results.

Color-coded path through the city center of Graz, Austria. The path starts in the lower left area of the image and ends in the upper left area. Since parts of the reconstruction of the square in the right area are missing, localization fails there. As soon as enough of the environment is covered by the reconstruction again, localization succeeds again.

A video briefly describing our approach can be found here.

Localization from Panoramic Images using Sensors (2012)

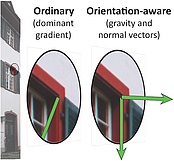

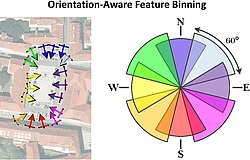

We extended our system presented at ISMAR 2011 with sensor support for feature matching during localization. In the offline stage, we additionally consider the upright direction (i.e., gravity) and the direction of the feature normal with respect to north to partition the features into separate bins.
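A minimal sketch of orientation-aware binning, assuming feature normals are given in a local east/north/up frame (so the upright direction is the gravity axis) and using 8 bins of 45 degrees each. The bin count, the threshold for near-vertical normals and the extra bin -1 for surfaces such as the ground are illustrative choices, not the values used in our system.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]  # (east, north, up) in a local world frame


def azimuth_deg(normal: Vec3) -> float:
    """Heading of a normal's horizontal component, clockwise from north, in [0, 360)."""
    east, north, _up = normal
    return math.degrees(math.atan2(east, north)) % 360.0


def bin_features(normals: List[Vec3], n_bins: int = 8, min_horizontal: float = 0.3) -> Dict[int, List[int]]:
    """Group feature indices by normal azimuth; near-vertical normals go to bin -1."""
    width = 360.0 / n_bins
    bins: Dict[int, List[int]] = defaultdict(list)
    for idx, n in enumerate(normals):
        norm = math.sqrt(sum(c * c for c in n))
        horizontal = math.hypot(n[0], n[1]) / norm  # share of the normal orthogonal to gravity
        if horizontal < min_horizontal:
            bins[-1].append(idx)  # e.g. ground plane: heading is undefined
        else:
            bins[int(azimuth_deg(n) // width) % n_bins].append(idx)
    return dict(bins)
```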

Flowchart of the new system with the new blocks marked in green.

During online operation the sensors are used to slice the panoramic image into tiles which correspond to different geographic orientations. The features in the image slice corresponding to a specific geographic orientation are matched against the previously binned features from the reconstruction.
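The online side can be sketched in the same spirit. Assuming the compass gives the heading of panorama column 0 and that the column index grows clockwise, each column maps to a geographic heading. If the reconstruction features are binned by normal azimuth as described above, a slice viewed toward heading h mostly contains surfaces whose normals point back toward the camera, i.e. near h + 180 degrees. This flip, the neighboring-bin tolerance for compass noise and the function names are our assumptions for illustration.

```python
from typing import List


def column_heading_deg(u: float, width: int, heading_at_u0: float) -> float:
    """Compass heading of panorama column u, assuming u grows clockwise (eastward)."""
    return (heading_at_u0 + 360.0 * u / width) % 360.0


def candidate_bins(view_heading_deg: float, n_bins: int = 8, neighbors: int = 1) -> List[int]:
    """Normal-azimuth bins worth matching for an image slice viewed toward view_heading_deg.

    Surfaces facing the camera have normals pointing back toward it, hence the
    180-degree flip; neighboring bins absorb compass noise.
    """
    width = 360.0 / n_bins
    facing = (view_heading_deg + 180.0) % 360.0
    center = int(facing // width) % n_bins
    return [(center + k) % n_bins for k in range(-neighbors, neighbors + 1)]
```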

From left to right: considering gravity for orientation assignment, orientation-aware feature binning and orientation-aware matching during online operation.

Below are some snapshots from the video we recorded live on an iPhone 4S. The full video can be found here.

Snapshots from our submission video.

Localization from GPS-Tagged Images without Reconstruction (2012)

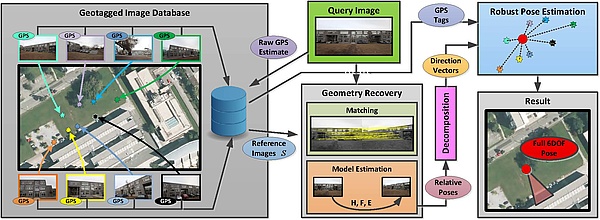

Inspired by the MS Read-Write idea, we investigated performing 6DOF self-localization without the need for a full 3D reconstruction. This idea was explored earlier by Zhang and Kosecka in 2006. We revisit it with a closer evaluation using a differential GPS setup, homographies and epipolar geometry.

Localization approach avoiding a full 3D reconstruction.
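To illustrate how known reference positions can pin down the query position without a 3D reconstruction, the sketch below solves only a simplified final step. It assumes each relative pose has already been converted into a ray in a common world frame, starting at a geo-located reference camera and pointing toward the query camera, and returns the least-squares point closest to all rays. Our ACCV 2012 method does not assume the reference orientations are known, so this is not its solver; the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _solve3(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("rays are (nearly) parallel; position is not constrained")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for k in range(col, 4):
                M[r][k] -= f * M[col][k]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][k] * x[k] for k in range(r + 1, 3))) / M[r][r]
    return x


def intersect_rays(centers: Sequence[Vec3], directions: Sequence[Vec3]) -> List[float]:
    """Least-squares point closest to all lines c_i + s * d_i.

    Minimizes sum_i |(I - u_i u_i^T)(x - c_i)|^2 with u_i = d_i / |d_i|, i.e. solves
    sum_i (I - u_i u_i^T) x = sum_i (I - u_i u_i^T) c_i.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(centers, directions):
        n = math.sqrt(sum(v * v for v in d))
        u = [v / n for v in d]
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - u[i] * u[j]  # projector orthogonal to the ray
                A[i][j] += p
                b[i] += p * c[j]
    return _solve3(A, b)
```

The least-squares formulation treats each ray as an infinite line, and at least two non-parallel rays are needed.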

The results encourage further investigation of this idea, since it opens up a completely new way of performing localization and is advantageous in terms of database management, among other aspects.

Geospatial Management and Utilization of Large-Scale Urban Visual Reconstructions

After modifying our reconstruction pipeline, we are now able to record video streams with a backpack system and reconstruct from omnidirectional cameras. Using a PostgreSQL database with extensions for spatial queries, we are able to store and manage full sparse point-cloud reconstructions and visualize them, together with other data such as Flickr or Panoramio images, in current GIS software such as Quantum GIS.
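The kind of spatial lookup involved can be illustrated with Python's built-in sqlite3 module as a stand-in for PostgreSQL with spatial extensions. The table layout, the asset kinds and the example coordinates (roughly central Graz) are hypothetical, and only a plain longitude/latitude bounding-box query is shown.

```python
import sqlite3
from typing import List, Tuple


def open_store() -> sqlite3.Connection:
    """In-memory stand-in for the geospatial database; the schema is hypothetical."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE assets (id INTEGER PRIMARY KEY, kind TEXT, lon REAL, lat REAL)")
    con.execute("CREATE INDEX assets_lon_lat ON assets (lon, lat)")
    return con


def add_asset(con: sqlite3.Connection, kind: str, lon: float, lat: float) -> None:
    """Store one geo-referenced item (a reconstruction, a photo, ...) by longitude/latitude in degrees."""
    con.execute("INSERT INTO assets (kind, lon, lat) VALUES (?, ?, ?)", (kind, lon, lat))


def assets_in_box(
    con: sqlite3.Connection, min_lon: float, min_lat: float, max_lon: float, max_lat: float
) -> List[Tuple[str, float, float]]:
    """All items inside an axis-aligned longitude/latitude box, in insertion order."""
    return con.execute(
        "SELECT kind, lon, lat FROM assets "
        "WHERE lon BETWEEN ? AND ? AND lat BETWEEN ? AND ? ORDER BY id",
        (min_lon, max_lon, min_lat, max_lat),
    ).fetchall()
```

A PostGIS setup would typically store a geometry column with a spatial (GiST) index and use functions such as ST_DWithin or ST_MakeEnvelope instead of raw coordinate comparisons.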

Backpack system for acquiring data with a Ladybug camera and a differential GPS receiver. The system records everything on a MacBook, using an iPhone to receive corrections for the DGPS sensor.

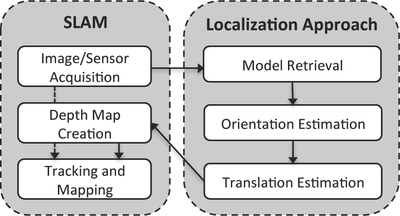

Global Localization from Monocular SLAM on a Mobile Phone (2014)

Our contributions in this work are as follows: 1) a novel system concept for global localization on smartphones, which combines local SLAM and global map registration; 2) a system design which allows the localization process to run in parallel on the server, while the client-side system is free to continue expanding and refining the map locally; 3) an iterative matching and registration scheme which allows much of the information needed for localization to be cached on the server; 4) evaluations of our system in both indoor and outdoor environments, including timing and positional accuracy measurements. Our evaluations highlight the advantages of SLAM-based localization over a model-based approach which uses single-image localization on each frame.

On the right side, reconstructions from three squares in Graz, Austria, registered in a globally accurate manner are shown. On the left, a set of video paths is shown in an indoor environment.

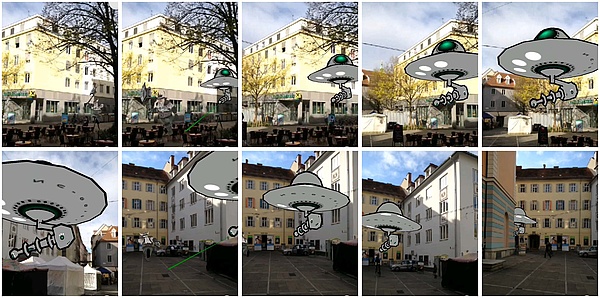

By robustly aligning our SLAM map with the reconstruction, we can create highly accurate annotations in outdoor scenarios.

A half-transparent building model is overlaid on the live camera image.
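Aligning a SLAM map with the reconstruction amounts to estimating a similarity transform (scale, rotation, translation) from corresponding points. For brevity, the sketch below assumes gravity-aligned maps and solves only the horizontal part in closed form, encoding points as complex numbers x + iy so that the transform is w = a * z + b. A full 3D alignment would use a method such as Umeyama's, and a robust variant would wrap this fit in RANSAC; this is an illustration, not our registration code.

```python
import cmath
from typing import Sequence, Tuple

Point2 = Tuple[float, float]


def fit_similarity_2d(local: Sequence[Point2], world: Sequence[Point2]) -> Tuple[complex, complex]:
    """Least-squares a, b such that world ~ a * local + b, with points as complex x + iy.

    Setting b = mean(world) - a * mean(local) and solving for a on the centered
    points gives a = sum(w' * conj(z')) / sum(|z'|^2).
    """
    z = [complex(*p) for p in local]
    w = [complex(*p) for p in world]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    a = num / den
    return a, w_mean - a * z_mean


def scale_and_yaw(a: complex) -> Tuple[float, float]:
    """Scale factor and rotation angle (radians) encoded in a."""
    return abs(a), cmath.phase(a)


def to_world(a: complex, b: complex, p: Point2) -> Point2:
    """Map a local SLAM point into the frame of the reconstruction."""
    q = a * complex(*p) + b
    return (q.real, q.imag)
```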

A video describing our approach can be found here.

Instant Outdoor Localization and SLAM Initialization from 2.5D Maps (2015)

In this work we introduce a novel method for instant geolocalization of the video stream captured by a mobile device. The first stage of our method registers the image to an untextured 2.5D map (2D building footprints plus approximate building heights), providing an accurate, globally aligned pose. This is done by first estimating the absolute camera orientation from straight line segments, then estimating the camera translation by segmenting the façades in the input image and matching them with those of the map. The resulting pose is suitable for initializing a SLAM system. The SLAM map is initialized by back-projecting the feature points onto synthetic depth images rendered from the augmented 2.5D map.
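Back-projecting feature points onto a rendered depth image can be sketched as below, assuming a pinhole camera with intrinsics (fx, fy, cx, cy), depth values measured along the optical axis, the pose convention x_cam = R x_world + t, and a depth of 0 wherever the rendered 2.5D model has no surface (e.g. sky). The function names and the nearest-pixel depth lookup are illustrative choices.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy), assumed pinhole model


def backproject(u: float, v: float, depth: float, fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Camera-frame 3D point for pixel (u, v), with depth measured along the optical axis."""
    return ((u - cx) / fx * depth, (v - cy) / fy * depth, depth)


def camera_to_world(R: Sequence[Sequence[float]], t: Vec3, Xc: Vec3) -> Vec3:
    """Invert x_cam = R x_world + t, i.e. x_world = R^T (x_cam - t)."""
    d = [Xc[i] - t[i] for i in range(3)]
    x, y, z = (sum(R[i][j] * d[i] for i in range(3)) for j in range(3))
    return (x, y, z)


def init_map_points(
    keypoints: Sequence[Tuple[float, float]],
    depth_image: Sequence[Sequence[float]],
    intrinsics: Intrinsics,
    R: Sequence[Sequence[float]],
    t: Vec3,
) -> List[Vec3]:
    """Lift keypoints that hit the rendered 2.5D model into world-frame map points."""
    points: List[Vec3] = []
    for u, v in keypoints:
        depth = depth_image[int(round(v))][int(round(u))]  # nearest-pixel lookup
        if depth > 0.0:  # 0 marks pixels where the model has no surface (e.g. sky)
            points.append(camera_to_world(R, t, backproject(u, v, depth, *intrinsics)))
    return points
```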

Current Project Status

The current system already contains all parts for reconstruction, registration and alignment. The algorithms can be used to create reconstructions of indoor or outdoor scenes. At the moment, our focus is on creating textured city models, using floor plans from OpenStreetMap for localization, and combining multiple fast approaches to make localization on mobile phones usable in a more straightforward way.

Project Team

Clemens Arth, Christian Pirchheim, Dieter Schmalstieg

Alumni

Manfred Klopschitz, Gerhard Reitmayr, Jonathan Ventura, Arnold Irschara, Daniel Wagner

Publications

Real-Time Self-Localization from Panoramic Images on Mobile Devices

Authors: Clemens Arth, Manfred Klopschitz, Gerhard Reitmayr, Dieter Schmalstieg

Details: International Symposium on Mixed and Augmented Reality (ISMAR), 26-29 Oct. 2011

Self-localization in large environments is a vital task for accurately registered information visualization in outdoor Augmented Reality (AR) applications. In this work, we present a system for self-localization on mobile phones using a GPS prior and an online-generated panoramic view of the user's environment. The approach is suitable for executing entirely on current-generation mobile devices, such as smartphones. Parallel execution of online incremental panorama generation and accurate 6DOF pose estimation using 3D point reconstructions allows for real-time self-localization and registration in large-scale environments. The power of our approach is demonstrated in several experimental evaluations.

Towards Wide Area Localization on Mobile Phones

Authors: Clemens Arth, Daniel Wagner, Manfred Klopschitz, Arnold Irschara, Dieter Schmalstieg

Details: International Symposium on Mixed and Augmented Reality (ISMAR), 19-23 Oct. 2009

We present a fast and memory efficient method for localizing a mobile user's 6DOF pose from a single camera image. Our approach registers a view with respect to a sparse 3D point reconstruction. The 3D point dataset is partitioned into pieces based on visibility constraints and occlusion culling, making it scalable and efficient to handle. Starting with a coarse guess, our system only considers features that can be seen from the user's position. Our method is resource efficient, usually requiring only a few megabytes of memory, thereby making it feasible to run on low-end devices such as mobile phones. At the same time it is fast enough to give instant results on this device class.

Exploiting Sensors on Mobile Phones to Improve Wide-Area Localization

Authors: Clemens Arth, Alessandro Mulloni, Dieter Schmalstieg

Details: International Conference on Pattern Recognition (ICPR), 11-15 Nov. 2012

In this paper, we discuss how the sensors available in modern smartphones can improve 6-degree-of-freedom (6DOF) localization in wide-area environments. In our research, we focus on phones as a platform for large-scale Augmented Reality (AR) applications. Thus, our aim is to estimate the position and orientation of the device accurately and quickly, since it is unrealistic to assume that users are willing to wait tens of seconds before they can interact with the application. We propose supplementing vision methods with sensor readings from the compass and accelerometer available in most modern smartphones. We evaluate this approach on a large-scale reconstruction of the city center of Graz, Austria. Our results show that our approach improves both accuracy and localization time, in comparison to an existing localization approach based solely on vision. Finally, we conclude our paper with a real-world validation of the approach on an iPhone 4S.

Full 6DOF Pose Estimation from Geo-Located Images

Authors: Clemens Arth, Gerhard Reitmayr, Dieter Schmalstieg

Details: Asian Conference on Computer Vision (ACCV), 5-9 Nov. 2012

Estimating the external calibration (the pose) of a camera with respect to its environment is a fundamental task in Computer Vision (CV). In this paper, we propose a novel method for estimating the unknown 6DOF pose of a camera with known intrinsic parameters from epipolar geometry only. For a set of geo-located reference images, we assume the camera position, but not the orientation, to be known. We estimate epipolar geometry between the image of the query camera and the individual reference images using image features. Epipolar geometry inherently contains information about the relative positioning of the query camera with respect to each of the reference cameras, giving rise to a set of relative pose estimates. Combining the set of pose estimates and the positions of the reference cameras in a robust manner allows us to estimate a full 6DOF pose for the query camera. We evaluate our algorithm on different datasets of real imagery in indoor and outdoor environments. Since our pose estimation method does not rely on an explicit reconstruction of the scene, our approach offers several significant advantages over existing algorithms from the area of pose estimation.

Geospatial Management and Utilization of Large-Scale Urban Visual Reconstructions

Authors: Clemens Arth, Jonathan Ventura, Dieter Schmalstieg

Details: The 4th International Conference on Computing for Geospatial Research & Application (COM.Geo 2013)

In this work we describe our approach to efficiently create, handle and organize large-scale Structure-from-Motion reconstructions of urban environments. For acquiring vast amounts of data, we use a Point Grey Ladybug 3 omnidirectional camera and a custom backpack system with a differential GPS sensor. Sparse point cloud reconstructions are generated and aligned with respect to the world in an offline process. Finally, all the data is stored in a geospatial database. We incorporate additional data from multiple crowd-sourced databases, such as maps from OpenStreetMap or images from Flickr or Instagram. We discuss how our system could be used in potential application scenarios from the area of Augmented Reality.

Global Localization from Monocular SLAM on a Mobile Phone

Authors: Jonathan Ventura, Clemens Arth, Gerhard Reitmayr, Dieter Schmalstieg

Details: IEEE Virtual Reality Conference (VR), March 29 - April 2, 2014

We propose the combination of a keyframe-based monocular SLAM system and a global localization method. The SLAM system runs locally on a camera-equipped mobile client and provides continuous, relative 6DoF pose estimation as well as keyframe images with computed camera locations. As the local map expands, a server process localizes the keyframes with a pre-made, globally-registered map and returns the global registration correction to the mobile client. The localization result is updated each time a keyframe is added, and observations of global anchor points are added to the client-side bundle adjustment process to further refine the SLAM map registration and limit drift. The end result is a 6DoF tracking and mapping system which provides globally registered tracking in real-time on a mobile device, overcomes the difficulties of localization with a narrow field-of-view mobile phone camera, and is not limited to tracking only in areas covered by the offline reconstruction.

Instant Outdoor Localization and SLAM Initialization from 2.5D Maps

Authors: Clemens Arth, Christian Pirchheim, Jonathan Ventura, Dieter Schmalstieg and Vincent Lepetit

Details: IEEE Int. Symposium on Mixed and Augmented Reality (ISMAR), September 27 - October 3, 2015

We present a method for large-scale geo-localization and global tracking of mobile devices in urban outdoor environments. In contrast to existing methods, we instantaneously initialize and globally register a SLAM map by localizing the first keyframe with respect to widely available untextured 2.5D maps. Given a single image frame and a coarse sensor pose prior, our localization method estimates the absolute camera orientation from straight line segments and the translation by aligning the city map model with a semantic segmentation of the image. We use the resulting 6DOF pose, together with information inferred from the city map model, to reliably initialize and extend a 3D SLAM map in a global coordinate system, applying a model-supported SLAM mapping approach. We show the robustness and accuracy of our localization approach on a challenging dataset, and demonstrate unconstrained global SLAM mapping and tracking of arbitrary camera motion on several sequences.