3D Pose Estimation and 3D Model Retrieval for Objects in the Wild

Overview

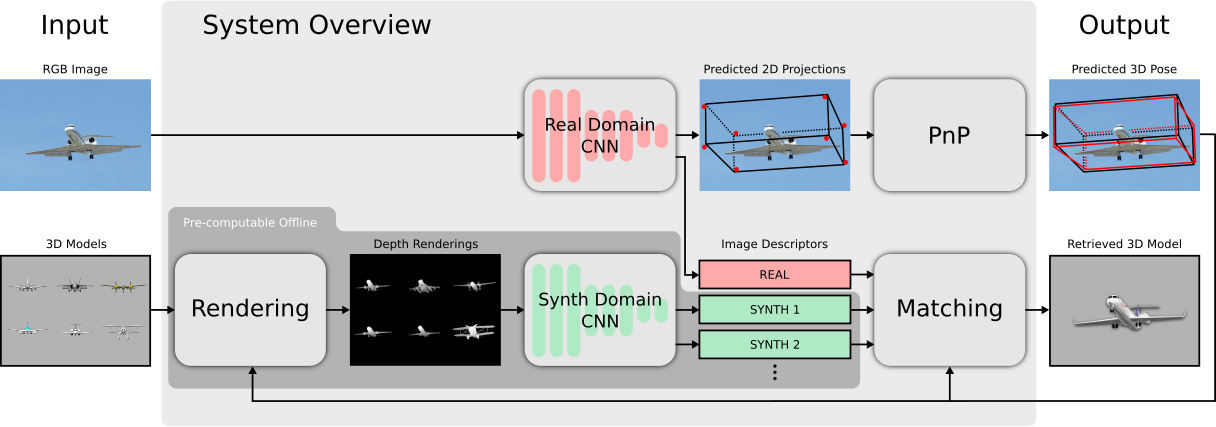

We present a scalable approach to retrieve 3D models for objects in the wild. Our method builds on the fact that knowing the object pose significantly reduces the complexity of the task. In particular, we first detect objects in RGB images and predict their 3D poses. For each detected object, we then render a number of candidate 3D models under the predicted pose. Finally, we identify the closest 3D model by matching an image descriptor computed from the detected object against image descriptors computed from the renderings. We extract these image descriptors using CNNs trained specifically for this task.
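The final matching step can be illustrated with a minimal sketch. It assumes the descriptors have already been computed and are plain fixed-length vectors, and it uses Euclidean distance as the similarity measure. Both the distance choice and the function name `retrieve_closest_model` are assumptions made for illustration, not details taken from the paper; the CNNs that produce the descriptors are not reproduced here.

```python
import numpy as np


def retrieve_closest_model(query_descriptor, rendering_descriptors):
    """Return (index, distance) of the rendering descriptor closest to the query.

    query_descriptor: 1D sequence of length D, computed from the detected object.
    rendering_descriptors: 2D sequence of shape (N, D), one row per candidate
        3D model rendered under the predicted pose.
    Euclidean distance is an assumption of this sketch.
    """
    query = np.asarray(query_descriptor, dtype=np.float64)
    candidates = np.asarray(rendering_descriptors, dtype=np.float64)

    if query.ndim != 1 or candidates.ndim != 2 or candidates.shape[1] != query.shape[0]:
        raise ValueError("expected a (D,) query and an (N, D) candidate array")

    # Distance from the query to every candidate rendering descriptor.
    distances = np.linalg.norm(candidates - query, axis=1)

    # The retrieved model is the candidate with the smallest distance.
    best = int(np.argmin(distances))
    return best, float(distances[best])


if __name__ == "__main__":
    # Toy 2D descriptors; real descriptors would be CNN outputs.
    query = [1.0, 0.0]
    renderings = [[0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
    index, distance = retrieve_closest_model(query, renderings)
    print(f"closest candidate: {index} (distance {distance:.3f})")
```

Because every candidate is rendered under the same predicted pose, the comparison only has to account for differences in shape, which is what makes a simple nearest-neighbor lookup of this kind plausible.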

Results

To demonstrate our 3D model retrieval approach for objects in the wild, we evaluate it in a realistic setup where we retrieve 3D models from ShapeNet given unseen RGB images from Pascal3D+. In particular, we train our 3D model retrieval approach purely on data from Pascal3D+, but use it to retrieve 3D models from ShapeNet.

Our approach predicts accurate 3D poses and 3D models for objects of different categories. In some cases, our predicted pose (see sofa) or our retrieved model from ShapeNet (see aeroplane and chair) is even more accurate than the annotated ground truth from Pascal3D+. Details are provided in the paper.

This work was supported by the Christian Doppler Laboratory for Semantic 3D Computer Vision, funded in part by Qualcomm Inc.

Corresponding Publication(s):

Efficient 3D Pose Estimation and 3D Model Retrieval

Alexander Grabner, Peter M. Roth and Vincent Lepetit

In Proc. Austrian Association of Pattern Recognition Workshop (OAGM), 2018

3D Pose Estimation and 3D Model Retrieval for Objects in the Wild

Alexander Grabner, Peter M. Roth and Vincent Lepetit

In Proc. Computer Vision and Pattern Recognition (CVPR), 2018