Time-of-Flight Imaging

Although the ToF measurement principle is well understood and technical implementations have been available for several years, its measurement quality and range of possible applications have reached a barrier governed by physical limitations and by practical shortcomings such as excessive power consumption. Research in this field is largely incremental and is mostly conducted by scientists working in signal processing, noise filtering and resolution enhancement.

In the course of this project, we follow a radically different approach. We combine robust, recent computer vision methods such as energy optimization and machine learning with low-level signals from the ToF sensor itself, different sensor modalities and time-sequential sensing. This combination and alignment of hardware and software R&D will be made possible by a new, close cooperation with our world-renowned project partner Infineon Technologies. With this approach, sensor performance in terms of power consumption, signal quality and robustness will be drastically increased, to a level that makes new applications in mobile, entertainment and automotive devices feasible.

We consider this approach especially fruitful for the newest generation of ToF devices, which can finally keep the promises of their predecessors: small package size and low price. We will address the even lower signal quality of these devices implicitly, circumventing the tedious and always incomplete approach of modeling every error source on its own. The idea is to exploit low-level access to ToF imaging data in order to modify the sensor parameters in real time, obtain time-sequential measurements, and obtain a priori knowledge of the scene, so that the measurement chain is modeled implicitly and optimal measurement results are retrieved.

Real-Time Hand Gesture Recognition and Human Computer Interaction using depth cameras

We present a novel technique that uses a range camera for real-time recognition of hand gesture and hand position in 3D. Simultaneously, the user's hand and head pose are tracked and used for interaction in a virtual 3D desktop environment. As gestures provide a natural way of communication between humans, gesture recognition is a major field of research that is also used for human-computer communication. Most existing hand interaction systems are restricted to a 2D touch-sensitive plane, or track and recognize the hand gesture on color images. Due to the lack of depth information, these systems are limited to 2D space, provide little depth invariance and are sensitive to rotation and segmentation errors. In contrast, we built a system for bare-hand 3D interaction that is independent of hand rotation and depth.

Contact: Matthias Rüther

Paper

Variational Depth Superresolution using Example-Based Edge Representations

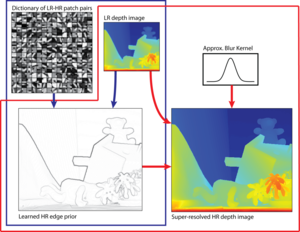

In this work we propose a novel method for depth image superresolution which combines recent advances in example-based upsampling with variational superresolution based on a known blur kernel. Most traditional depth superresolution approaches use additional high resolution intensity images as guidance for superresolution. In our method we learn a dictionary of edge priors from an external database of high and low resolution examples. In a novel variational sparse coding approach this dictionary is used to infer strong edge priors. In addition to the traditional sparse coding constraints, the difference in the overlap of neighboring edge patches is minimized in our optimization. These edge priors are used in a novel variational superresolution as anisotropic guidance of a higher order regularization. Both the sparse coding and the variational superresolution of the depth are solved based on the primal-dual formulation. In an exhaustive numerical and visual evaluation we show that our method clearly outperforms existing approaches on multiple real and synthetic datasets.

Contact: Matthias Rüther

Paper

Supplemental Material

Middlebury Evaluation Results

Variational Depth Superresolution using Example-Based Edge Representations. Our method estimates strong edge priors from a given LR depth image and a learned dictionary using a novel sparse coding approach (blue part). The learned HR edge prior is used as anisotropic guidance in a novel variational SR using higher order regularization (red part).

Dense Variational Scene Flow from RGB-D Data

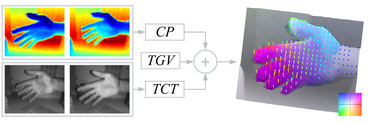

We present a novel method for dense variational scene flow estimation based on a multiscale Ternary Census Transform in combination with a patchwise Closest Points depth data term. On the one hand, the Ternary Census Transform in the intensity data term is capable of handling illumination changes, low texture and noise. On the other hand, the patchwise Closest Points search in the depth data term increases the robustness in low structured regions. Further, we utilize higher order regularization which is weighted and directed according to the input data by an anisotropic diffusion tensor. This makes it possible to calculate a dense and accurate flow field which supports smooth as well as non-rigid movements while preserving flow boundaries. The numerical algorithm is solved based on a primal-dual formulation and is efficiently parallelized to run at high frame rates. In an extensive qualitative and quantitative evaluation we show that this novel method for scene flow calculation outperforms existing approaches. The method is applicable to any sensor delivering dense depth and intensity data, such as Microsoft Kinect or Intel Gesture Camera.
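To illustrate why a census-type descriptor tolerates illumination changes, the following is a minimal single-scale sketch of a ternary census signature. It is not the implementation used in the project: the function names, the 3x3 window and the threshold `eps` are assumptions chosen for illustration, and the multiscale extension is omitted.

```python
def ternary_census(image, x, y, radius=1, eps=2.0):
    """Return the ternary census signature of pixel (x, y).

    Each neighbor is encoded as +1 (clearly brighter than the center),
    -1 (clearly darker) or 0 (within +/- eps of the center).
    `radius` and `eps` are illustrative assumptions.
    """
    center = image[y][x]
    height, width = len(image), len(image[0])
    signature = []
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx == 0 and dy == 0:
                continue
            # Clamp coordinates so border pixels reuse the nearest valid neighbor.
            ny = min(max(y + dy, 0), height - 1)
            nx = min(max(x + dx, 0), width - 1)
            diff = image[ny][nx] - center
            signature.append(1 if diff > eps else -1 if diff < -eps else 0)
    return signature


def census_distance(sig_a, sig_b):
    """Count the positions where two signatures disagree (Hamming distance)."""
    return sum(1 for a, b in zip(sig_a, sig_b) if a != b)


patch = [
    [10, 10, 50],
    [10, 12, 50],
    [10, 11, 50],
]
# A uniformly brighter copy: every value is shifted by +30.
brighter = [[v + 30 for v in row] for row in patch]

sig = ternary_census(patch, 1, 1)
sig_brighter = ternary_census(brighter, 1, 1)
# Only intensity differences enter the signature, so the additive
# brightness change leaves it unchanged and the distance is zero.
print(sig, census_distance(sig, sig_brighter))
```

Because the signature depends only on thresholded differences to the center pixel, additive brightness changes cancel out, and the zero code absorbs small noise in weakly textured regions.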

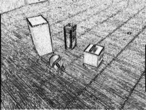

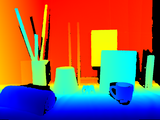

The scene flow is estimated from two consecutive depth and intensity acquisitions. The depth data term is calculated as patchwise Closest Point (CP) search and the intensity data term is calculated as Ternary Census Transform (TCT). For regularization we propose an anisotropic Total Generalized Variation (TGV). The flow is visualized as a color coded X;Y map (motion key in the bottom right). The Z component is shown as arrows colored according to their magnitude.

Contact: Christian Reinbacher, Gernot Riegler, Matthias Rüther

aTGV-SF: Dense Variational Scene Flow through Projective Warping and Higher Order Regularization [bib]

Paper, Matlab Code, Results

CP-Census: A Novel Model for Dense Variational Scene Flow from RGB-D Data [bib]

Paper, Results, Matlab Evaluation Script

Image Guided Depth Map Upsampling

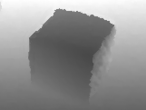

In this project we present a novel method for the challenging problem of depth image upsampling. Modern depth cameras such as Kinect or ToF cameras deliver dense, high quality depth measurements but are limited in their lateral resolution. To overcome this limitation we formulate a convex optimization problem using higher order regularization for depth image upsampling. In this optimization an anisotropic diffusion tensor, calculated from a high resolution intensity image, is used to guide the upsampling. We derive a numerical algorithm based on a primal-dual formulation that is efficiently parallelized and runs at multiple frames per second. We show that this novel upsampling clearly outperforms state-of-the-art approaches in terms of speed and accuracy on the widely used Middlebury 2007 datasets. Furthermore, we introduce novel datasets with highly accurate groundtruth, which, for the first time, make it possible to benchmark depth upsampling methods using real sensor data.
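As an illustration of how an intensity image can steer the regularization, the sketch below computes one common form of an anisotropic diffusion tensor at a single pixel: smoothing across an intensity edge is damped exponentially with the gradient magnitude, while smoothing along the edge is left untouched. The function name, the central-difference gradient and the parameter values `beta` and `gamma` are assumptions for illustration, not necessarily the exact choices made in the project.

```python
import math


def diffusion_tensor(image, x, y, beta=9.0, gamma=0.85):
    """Return the 2x2 tensor T = w * n n^T + p p^T at pixel (x, y).

    n is the normalized intensity gradient, p is perpendicular to n and
    w = exp(-beta * |grad I| ** gamma). `beta` and `gamma` are assumed
    example values; intensities are expected in the range [0, 1].
    """
    height, width = len(image), len(image[0])
    # Central differences with clamped borders.
    gx = 0.5 * (image[y][min(x + 1, width - 1)] - image[y][max(x - 1, 0)])
    gy = 0.5 * (image[min(y + 1, height - 1)][x] - image[max(y - 1, 0)][x])
    mag = math.hypot(gx, gy)
    if mag < 1e-12:
        # No edge information: fall back to isotropic regularization.
        return [[1.0, 0.0], [0.0, 1.0]]
    nx, ny = gx / mag, gy / mag
    px, py = -ny, nx  # unit vector along the edge
    w = math.exp(-beta * mag ** gamma)
    off_diagonal = w * nx * ny + px * py
    return [
        [w * nx * nx + px * px, off_diagonal],
        [off_diagonal, w * ny * ny + py * py],
    ]


# Intensity image with a vertical edge between columns 1 and 2.
edge = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
flat_tensor = diffusion_tensor(edge, 0, 1)  # homogeneous region
edge_tensor = diffusion_tensor(edge, 1, 1)  # pixel next to the edge
print(flat_tensor, edge_tensor)
```

In the homogeneous region the tensor is the identity, so the depth is smoothed equally in all directions. Next to the edge the horizontal entry becomes very small, which lets a depth discontinuity form where the intensity image also has an edge.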

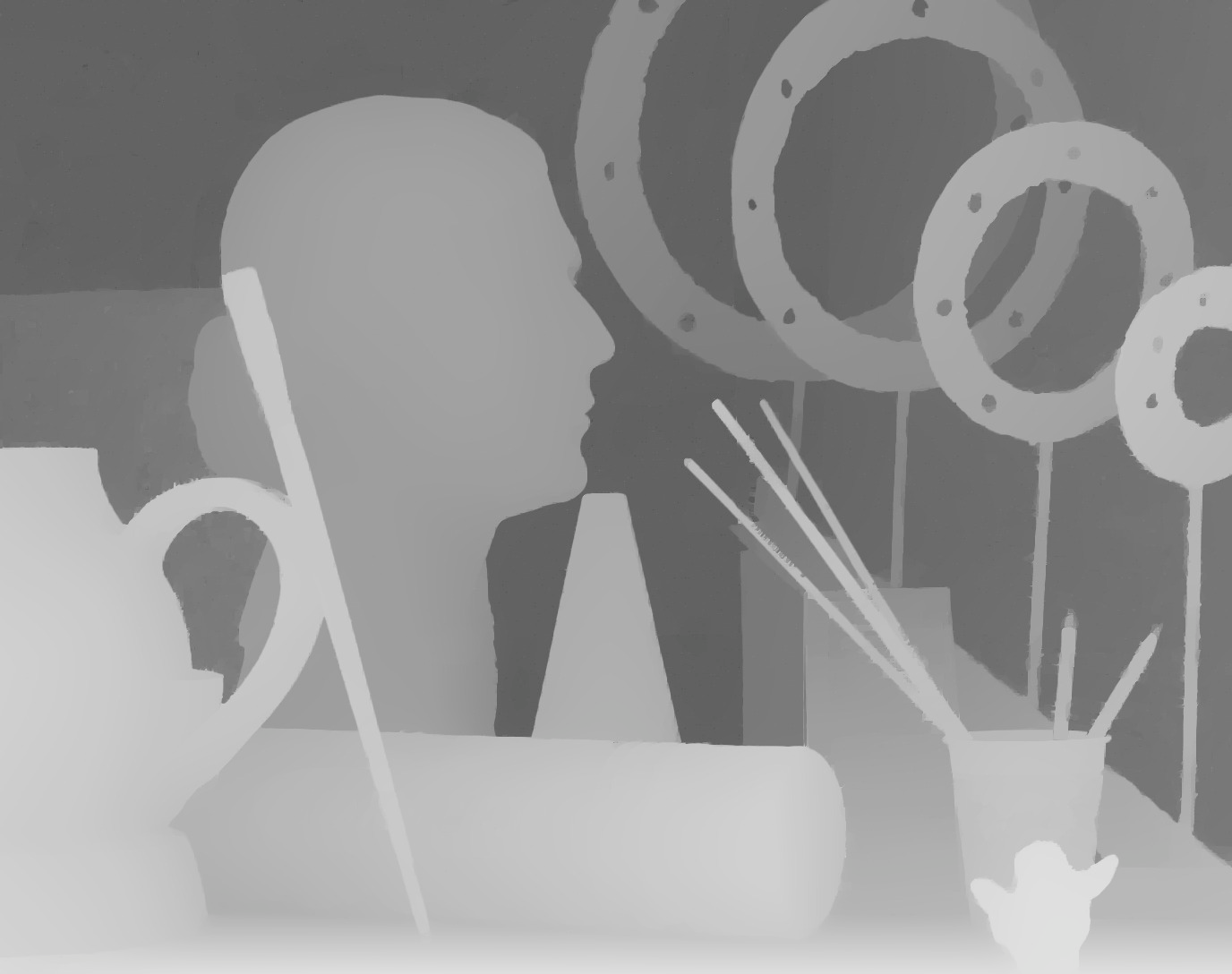

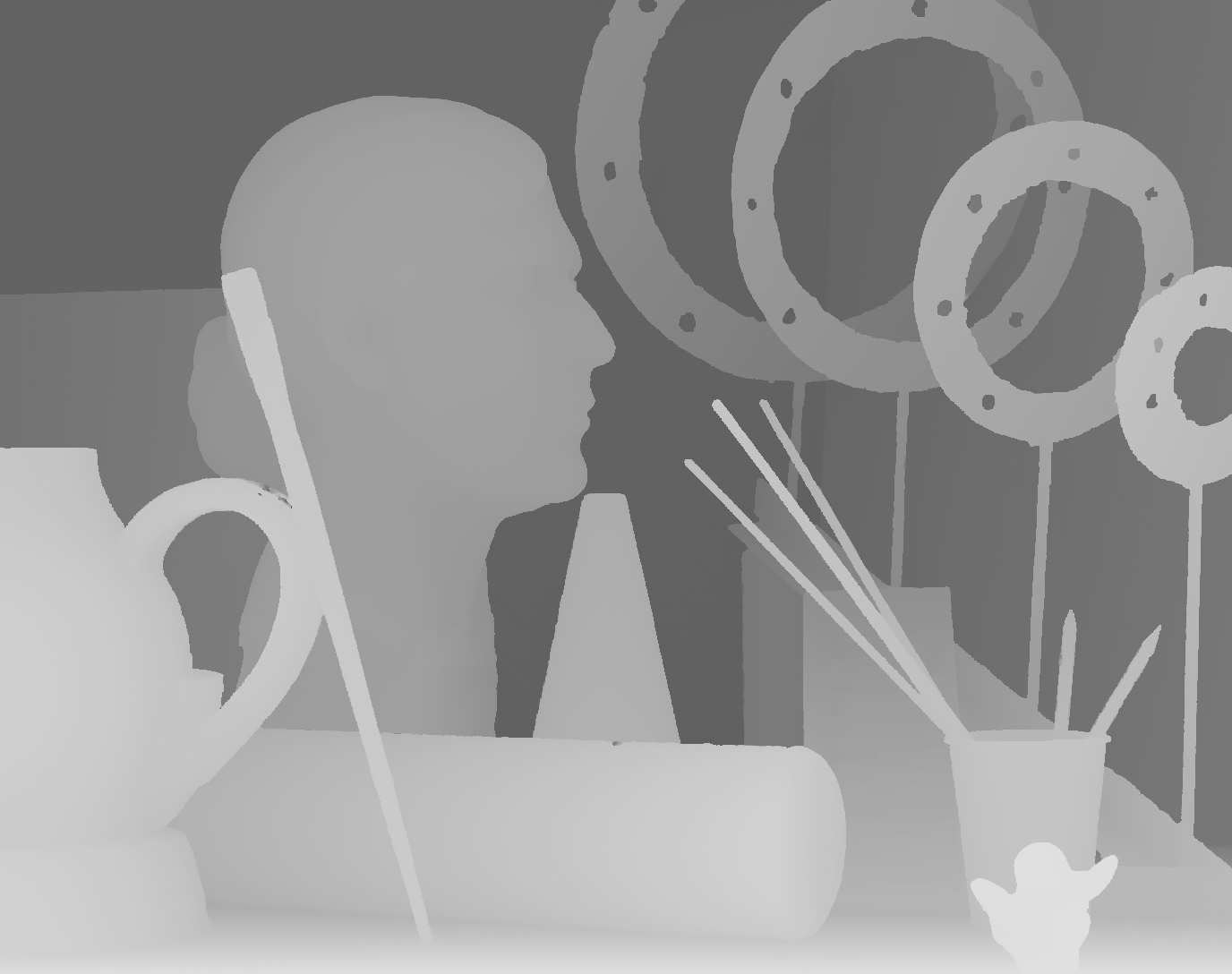

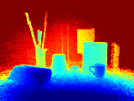

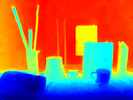

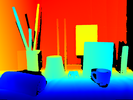

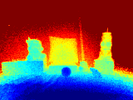

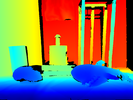

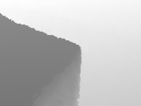

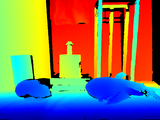

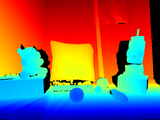

Upsampling of a low resolution depth image using an additional high resolution intensity image through image guided anisotropic Total Generalized Variation. Depth maps are color coded for better visualization.

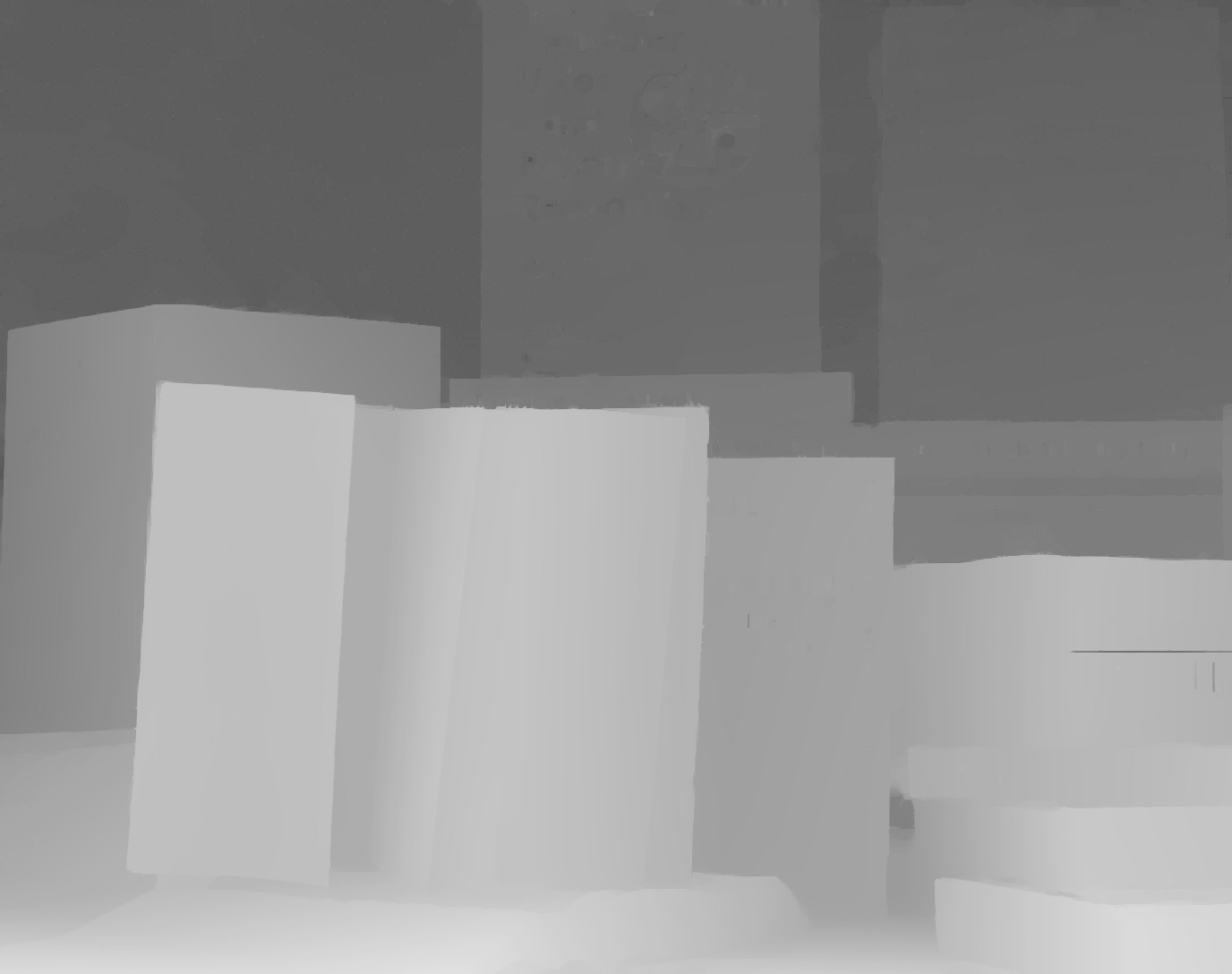

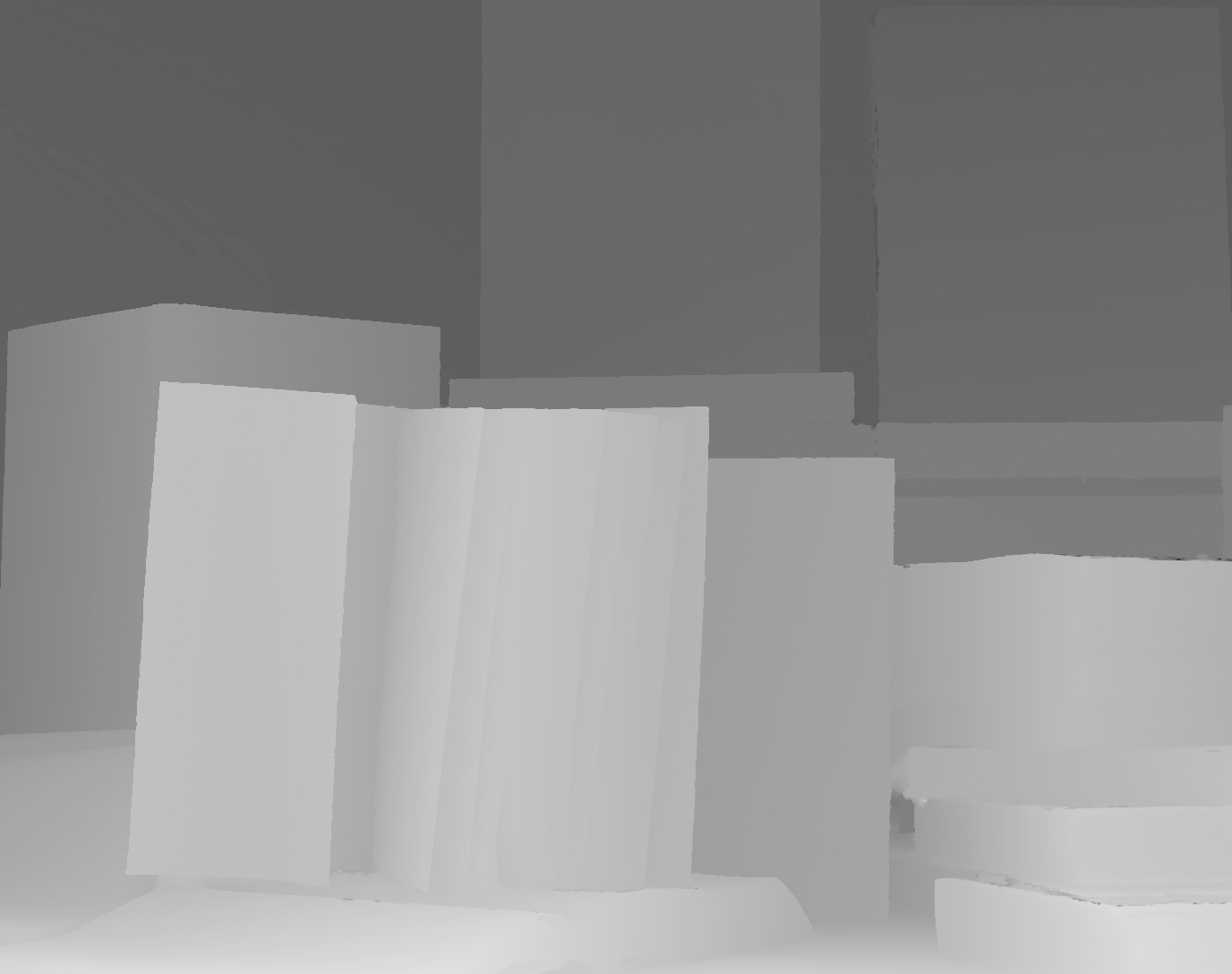

Visual evaluation of x4 upsampling on the Middlebury 2007 dataset. Images from left to right: low resolution depth input of size 172x136 with added noise, high resolution intensity image of size 1390x1110, upsampling result after Image Guided Depth Upsampling of size 1390x1110, groundtruth depth image.

IMPORTANT: All errors in the table are given as Mean Absolute Error (MAE), not as RMSE as reported in the paper.
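The two error measures are not interchangeable, which is why the distinction matters when comparing numbers. The following minimal sketch computes both on a made-up depth profile; the values are invented for illustration and do not come from any of the evaluation tables.

```python
import math


def mae(estimate, ground_truth):
    """Mean Absolute Error: average of |estimate - ground truth|."""
    errors = [abs(e - g) for e, g in zip(estimate, ground_truth)]
    return sum(errors) / len(errors)


def rmse(estimate, ground_truth):
    """Root Mean Squared Error: square root of the mean squared difference."""
    squared = [(e - g) ** 2 for e, g in zip(estimate, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))


# Invented example: three small errors and one large outlier.
ground_truth = [100.0, 100.0, 100.0, 100.0]
estimate = [101.0, 99.0, 100.0, 108.0]

print(mae(estimate, ground_truth))   # 2.5
print(rmse(estimate, ground_truth))  # sqrt(16.5), roughly 4.06
```

RMSE is never smaller than MAE on the same data and penalizes single large deviations much more strongly, so values from an MAE table and an RMSE table should not be compared directly.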

The RMSE results can be found in the table below.

Quantitative Middlebury evaluation measured as Root Mean Squared Error (RMSE).

[1] Q. Yang, R. Yang, J. Davis, and D. Nister. Spatial-depth super resolution for range images. In Proc. CVPR, 2007.

[2] K. He, J. Sun, and X. Tang. Guided image filtering. In Proc. ECCV, 2010.

[3] J. Diebel and S. Thrun. An application of markov random fields to range sensing. In Proc. NIPS, 2006.

[4] D. Chan, H. Buisman, C. Theobalt, and S. Thrun. A Noise-Aware Filter for Real-Time Depth Upsampling. In Proc. ECCV Workshops, 2008.

[5] J. Park, H. Kim, Y.-W. Tai, M. Brown, and I. Kweon. High quality depth map upsampling for 3d-tof cameras. In Proc. ICCV, 2011.

[6] D. Ferstl, C. Reinbacher, R. Ranftl, M. Ruether, and H. Bischof . Image Guided Depth Upsampling using Anisotropic Total Generalized Variation. In Proc. ICCV, 2013.

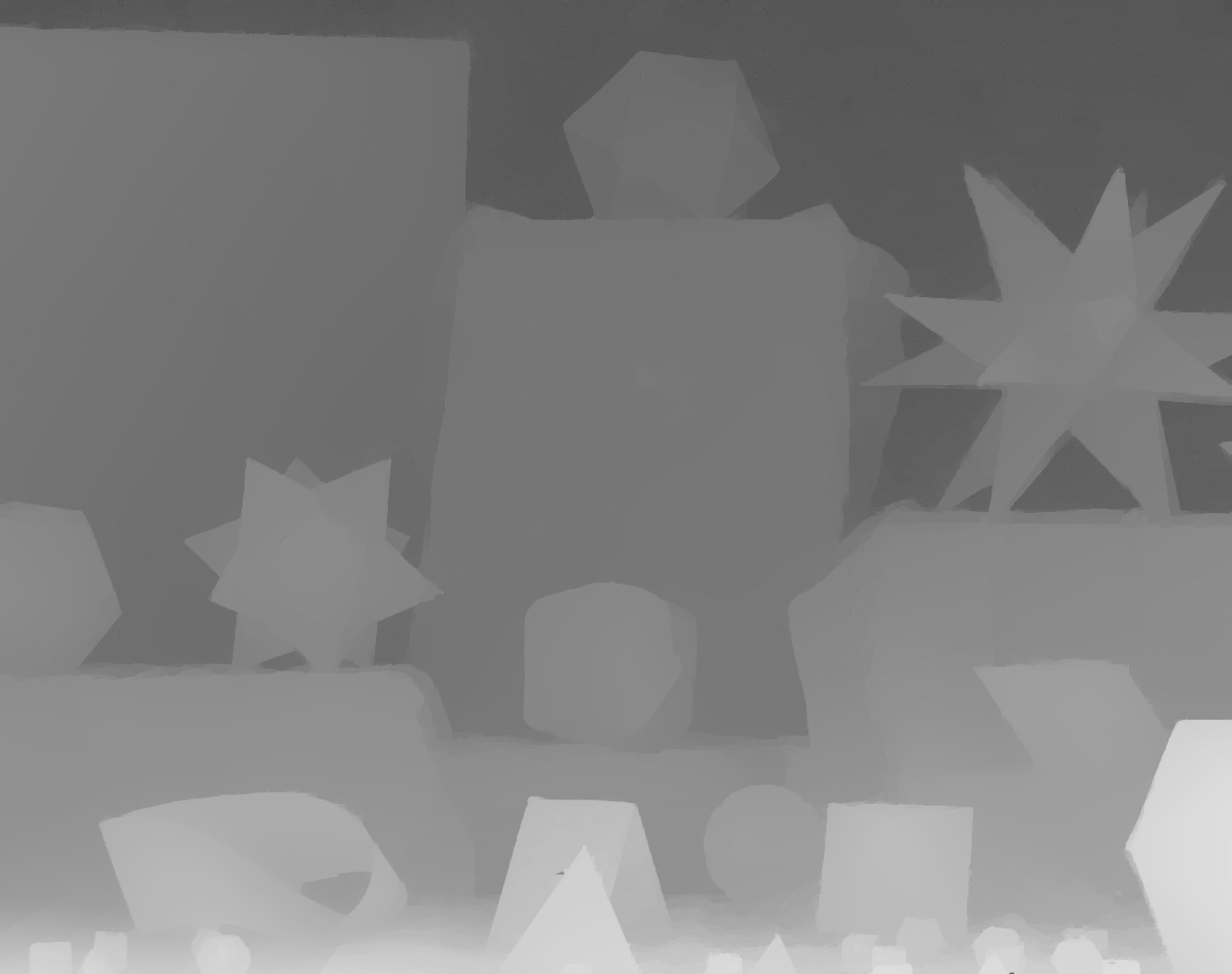

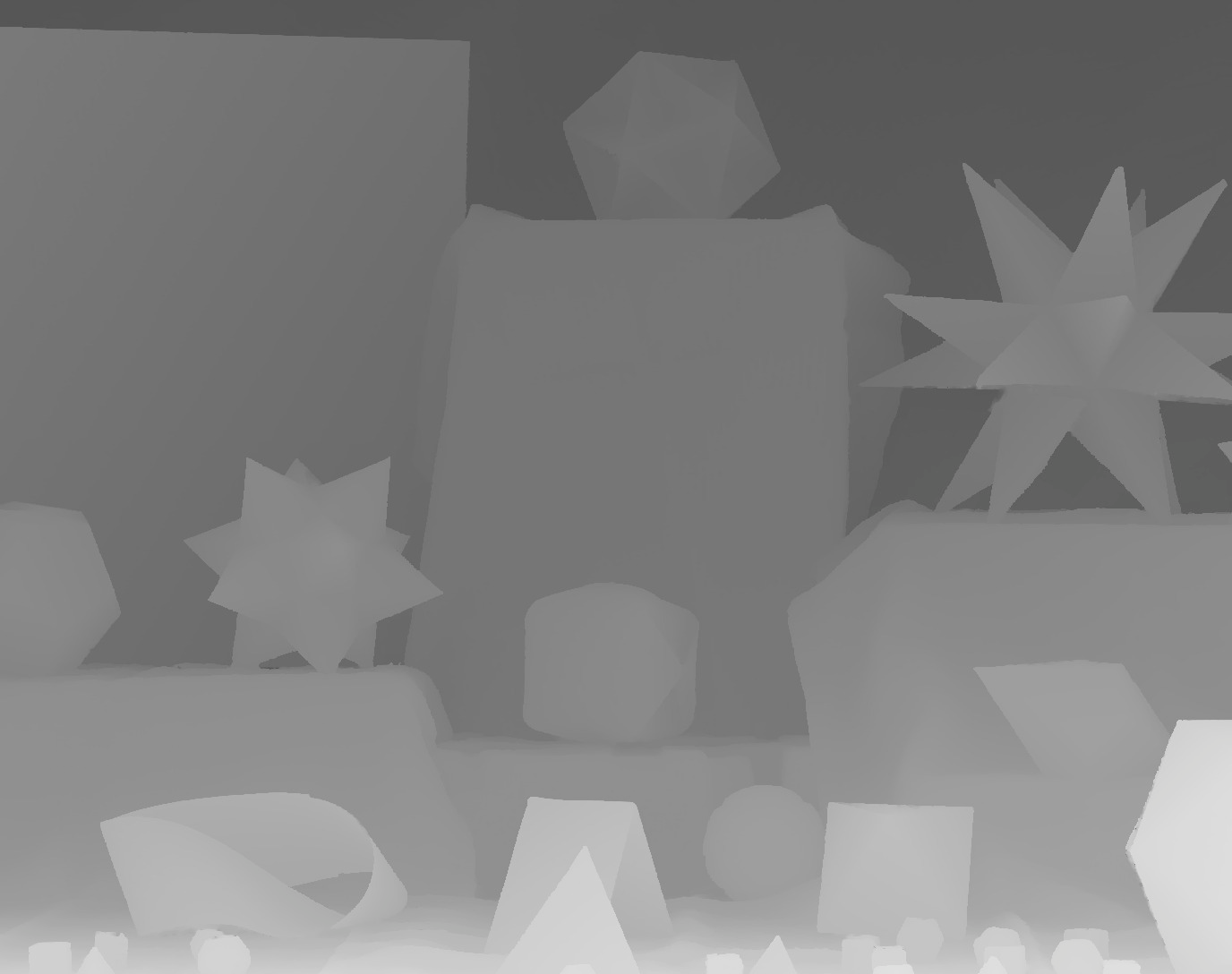

Visual evaluation of approximately x6.25 upsampling on our novel real-world dataset, available for download at the ToFMark depth upsampling evaluation page. Images from left to right: low resolution depth input image from a PMD Nano Time-of-Flight camera of size 160x120 with added noise, high resolution intensity image of size 810x610, upsampling result after Image Guided Depth Upsampling of size 810x610, groundtruth depth image acquired by a high accuracy structured light scanner.

[1] J. Kopf, M. F. Cohen, D. Lischinski, and M. Uyttendaele. Joint bilateral upsampling. ACM Transactions on Graphics, 26(3), 2007.

[2] K. He, J. Sun, and X. Tang. Guided image filtering. In Proc. ECCV, 2010.

[3] D. Ferstl, C. Reinbacher, R. Ranftl, M. Ruether, and H. Bischof . Image Guided Depth Upsampling using Anisotropic Total Generalized Variation. In Proc. ICCV, 2013.

Image Guided Depth Upsampling using Anisotropic Total Generalized Variation [bib]

Contact: Christian Reinbacher, Matthias Rüther

Synthetic Data

Evaluation on ToFMark Dataset

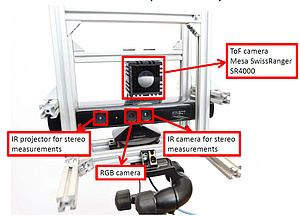

Multi-Modality Depth Map Fusion

In this project we present a novel fusion method that combines complementary 3D and 2D imaging techniques. Consider a Time-of-Flight sensor that acquires a dense depth map over a wide depth range but at comparatively low resolution, and a stereo sensor that generates a disparity map in high resolution but with occlusions and outliers. In our method, we fuse depth data, and optionally also intensity data, using a primal-dual optimization with an energy functional that is designed to compensate for missing parts, filter strong outliers and reduce the acquisition noise. The numerical algorithm is efficiently implemented on a GPU to achieve a processing speed of 10 to 15 frames per second. Experiments on synthetic, real and benchmark datasets show that the results are superior compared to each sensor alone and to competing optimization techniques. In a practical example, we are able to fuse a Kinect triangulation sensor and a small size Time-of-Flight camera to create a gaming sensor with superior resolution, acquisition range and accuracy.
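The general idea of fusing two depth measurements with a primal-dual scheme can be sketched on a 1D depth profile. The example below is a strongly simplified stand-in and not the energy functional of the project: it assumes a quadratic data term with per-sample confidence weights (weight 0 marks a missing sample) and a plain total variation regularizer, and solves the resulting convex problem with a standard first-order primal-dual iteration. The function name, `lam`, the step sizes and the sample values are illustrative assumptions.

```python
def fuse_depth_1d(measurements, weights, lam=2.0, iterations=500):
    """Fuse several 1D depth profiles into one regularized profile.

    Minimizes sum_i |u[i+1] - u[i]|
              + sum_k sum_i lam * w_k[i] / 2 * (u[i] - d_k[i]) ** 2.
    """
    n = len(measurements[0])
    # The forward difference D satisfies ||D||^2 <= 4 in 1D,
    # so tau * sigma * ||D||^2 <= 0.81 < 1.
    tau = sigma = 0.45
    # Per-sample sums used by the closed-form proximal step of the data term.
    w_sum = [sum(w[i] for w in weights) for i in range(n)]
    wd_sum = [sum(w[i] * d[i] for w, d in zip(weights, measurements))
              for i in range(n)]
    u = [wd_sum[i] / w_sum[i] if w_sum[i] > 0 else 0.0 for i in range(n)]
    u_bar = list(u)
    p = [0.0] * (n - 1)  # dual variable, one entry per forward difference
    for _ in range(iterations):
        # Dual ascent step followed by projection onto [-1, 1].
        for i in range(n - 1):
            step = p[i] + sigma * (u_bar[i + 1] - u_bar[i])
            p[i] = max(-1.0, min(1.0, step))
        u_prev = u
        u = []
        for i in range(n):
            # Adjoint of the forward difference applied to p.
            dt_p = (p[i - 1] if i > 0 else 0.0) - (p[i] if i < n - 1 else 0.0)
            v = u_prev[i] - tau * dt_p
            # Proximal step of the weighted quadratic data term.
            u.append((v + tau * lam * wd_sum[i]) / (1.0 + tau * lam * w_sum[i]))
        # Over-relaxation of the primal variable.
        u_bar = [2.0 * a - b for a, b in zip(u, u_prev)]
    return u


# Invented profiles: a dense but noisy "ToF" signal and a more reliable
# "stereo" signal with missing samples (weight 0, value ignored).
tof = [1.0, 1.1, 0.9, 1.0, 2.1, 1.9, 2.0, 2.0]
stereo = [1.0, 1.0, 0.0, 0.0, 2.0, 2.0, 0.0, 2.0]
tof_w = [0.5] * 8
stereo_w = [1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 1.0]

fused = fuse_depth_1d([tof, stereo], [tof_w, stereo_w])
print([round(v, 3) for v in fused])
```

Samples without a stereo measurement are filled from the ToF data and from the regularizer, while the depth step between the two plateaus is preserved. A quadratic data term does not suppress strong outliers well, so a robust data term and higher order regularization would be natural next steps beyond this sketch.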

A scene acquired by a low resolution ToF and a high-resolution stereo sensor is fused into one high resolution depth map. To preserve sharp edges and further reduce noise a 2D intensity image is used as an additional depth cue through an anisotropic diffusion tensor.

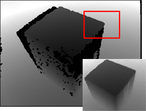

Evaluation of a Kinect-ToF depth sensor fusion. The first column shows the RGB intensity image and column two the tensor magnitude. The third column shows the Kinect and ToF depth map where a small part (marked in red) of the result is magnified for better visualization (column four).

Contact: Matthias Rüther

Multi-Modality Depth Map Fusion using Primal-Dual Optimization [bib]

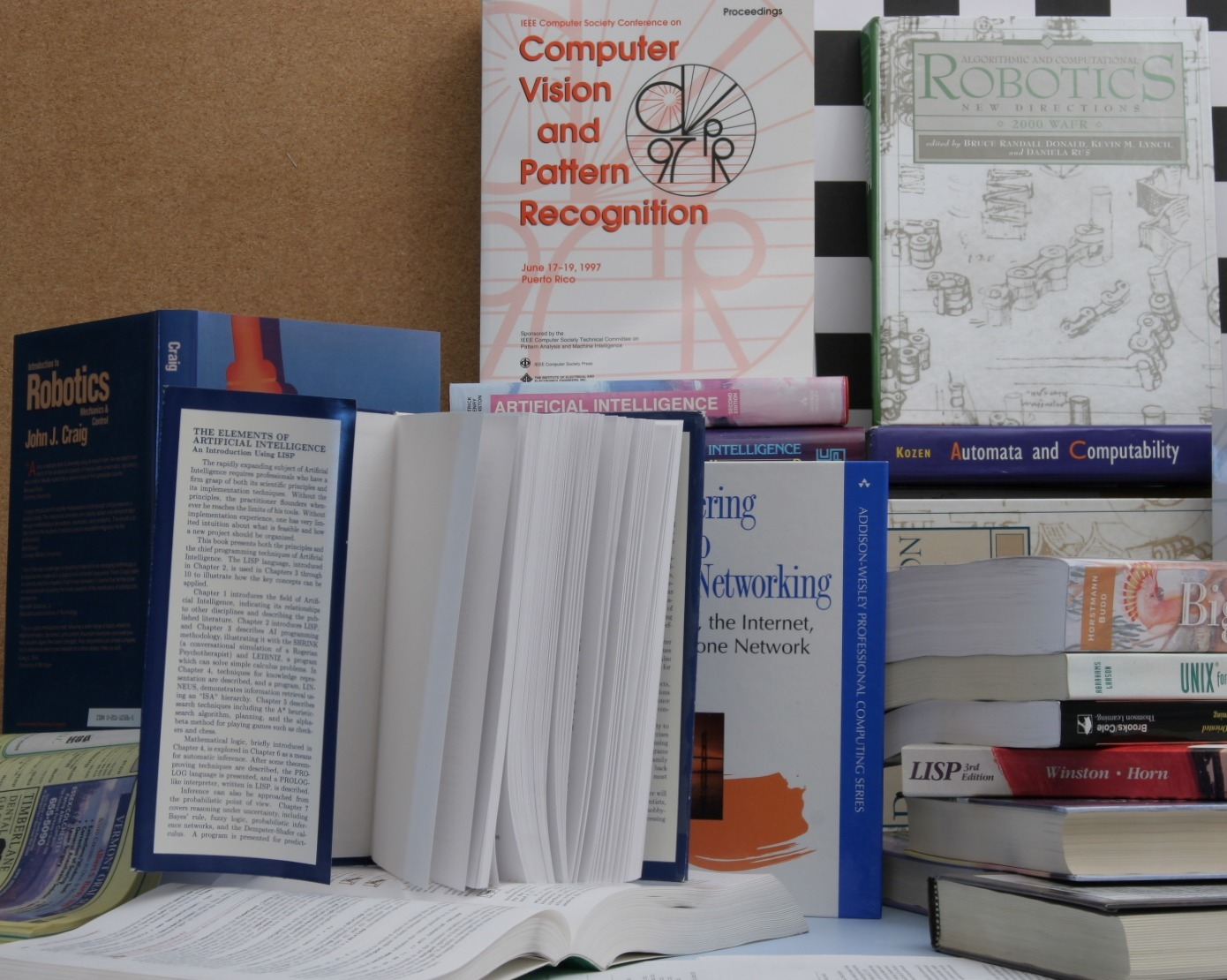

ToFMark - A Depth Upsampling Evaluation Dataset

Welcome to the ToFMark evaluation page. This website is built to validate, compare and evaluate different depth image upsampling/superresolution algorithms. The basic principle is to use a low-resolution Time-of-Flight (ToF) depth acquisition together with a high-resolution intensity image.

[1] J. Kopf, M. F. Cohen, D. Lischinski, and M. Uyttendaele. Joint bilateral upsampling. ACM Transactions on Graphics, 26(3), 2007.

[2] K. He, J. Sun, and X. Tang. Guided image filtering. In Proc. ECCV, 2010.

[3] D. Ferstl, C. Reinbacher, R. Ranftl, M. Ruether, and H. Bischof . Image Guided Depth Upsampling using Anisotropic Total Generalized Variation. In Proc. ICCV, 2013.

Image Guided Depth Upsampling using Anisotropic Total Generalized Variation [bib]

Contact: Christian Reinbacher, Matthias Rüther

Because the depth and intensity data do not originate from the same sensor, the depth and intensity information is not aligned. Each ToFMark validation dataset includes:

- a low-resolution offset corrected ToF depth and infrared image

- a high-resolution intensity image

- the ToF and intensity camera matrices

- the sparse ToF depth mapped to the intensity camera system and

- the corresponding high-resolution ground-truth depth
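The mapping of ToF depth into the intensity camera system can be sketched with a standard pinhole camera model. The code below is an illustration under assumptions and not the procedure used to create the datasets: the intrinsics, rotation and translation are invented placeholder values rather than the calibration shipped with ToFMark, the depth is assumed to be measured along the optical axis, and lens distortion is ignored.

```python
def map_tof_to_intensity(u, v, depth, tof_intrinsics, rgb_intrinsics,
                         rotation, translation):
    """Map one ToF pixel with known depth into the intensity image.

    Intrinsics are (fx, fy, cx, cy) tuples, `rotation` is a 3x3 matrix and
    `translation` a 3-vector taking ToF coordinates to intensity camera
    coordinates. Returns (u', v', depth') in the intensity camera.
    """
    fx, fy, cx, cy = tof_intrinsics
    # Back-project the ToF pixel to a 3D point in the ToF camera frame.
    point = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # Rigid transform into the intensity camera frame: X' = R X + t.
    moved = [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    gx, gy, gcx, gcy = rgb_intrinsics
    # Perspective projection onto the intensity image plane.
    return (gx * moved[0] / moved[2] + gcx,
            gy * moved[1] / moved[2] + gcy,
            moved[2])


# Placeholder calibration (assumed values, for illustration only).
tof_intrinsics = (100.0, 100.0, 80.0, 60.0)     # 160x120 ToF image
rgb_intrinsics = (500.0, 500.0, 405.0, 305.0)   # 810x610 intensity image
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
baseline = [0.05, 0.0, 0.0]                     # 5 cm horizontal offset

# The ToF principal point at 1 m depth lands 25 pixels to the right of
# the intensity principal point: approximately (430.0, 305.0, 1.0).
print(map_tof_to_intensity(80.0, 60.0, 1.0, tof_intrinsics, rgb_intrinsics,
                           identity, baseline))
```

Applying this mapping to every valid ToF pixel produces depth samples at non-integer, scattered positions in the much larger intensity image, which is why the mapped ToF depth is sparse and an upsampling method is needed to obtain a dense result.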

| Books Dataset | Shark Dataset | Devil Dataset |

|---|---|---|

| download Books dataset | download Shark dataset | download Devil dataset |

Evaluation

| Algorithm | Books RMSE[mm] | Shark RMSE[mm] | Devil RMSE[mm] |

|---|---|---|---|

| Nearest Neighbor | 18.21 | 21.83 | 19.36 |

| Bilinear | 17.10 | 20.17 | 18.66 |

| Kopf [1] | 16.03 | 18.79 | 27.57 |

| He [2] | 15.74 | 18.21 | 27.04 |

| OURS [3] | 12.36 | 15.29 | 14.68 |

Robot Vision

News

2016/12/16: New Open Student Position: LIDAR Metrology Tooling

2016/12/01: New Open Student Position: Robotic Charging of Electric Vehicles

2016/07/15: Accepted to BMVC 2016

Our paper "A Deep Primal-Dual Network for Guided Depth Super-Resolution" has been accepted for oral presentation at the British Machine Vision Conference 2016 held at the University of York, United Kingdom.

2016/07/11: Accepted to ECCV 2016

Our paper "ATGV-Net: Accurate Depth Superresolution" has been accepted at the European Conference on Computer Vision 2016 in Amsterdam, The Netherlands.

2015/10/07: Accepted to ICCV 2015 Workshop: TASK-CV

Our paper "Anatomical landmark detection in medical applications driven by synthetic data" has been accepted at the IEEE International Conference on Computer Vision 2015 workshop on transferring and adapting source knowledge in computer vision.

2015/09/14: Camera calibration code online

The camera calibration toolbox accompanying our paper "Learning Depth Calibration of Time-of-Flight Cameras" is available here.

2015/09/07: Accepted to ICCV 2015

Our papers "Variational Depth Superresolution using Example-Based Edge Representations" and "Conditioned Regression Models for Non-Blind Single Image Super-Resolution" have been accepted at the IEEE International Conference on Computer Vision 2015, December 13-16, Santiago, Chile.

2015/07/03: Accepted to BMVC 2015

Our papers "Depth Restoration via Joint Training of a Global Regression Model and CNNs" and "Learning Depth Calibration of Time-of-Flight Cameras" have been accepted as poster presentations at the 26th British Machine Vision Conference, September 7-10, Swansea, United Kingdom.