Conditioned Regression Models for Non-Blind Single Image Super-Resolution

Single image super-resolution is an important task in computer vision with many practical applications. Current state-of-the-art methods typically rely on machine learning algorithms to infer a mapping from low- to high-resolution images. These methods use a single fixed blur kernel during training and, consequently, assume that exactly the same kernel underlies the image formation process of all test images. However, this setting is not realistic for practical applications, because the blur typically differs from one test image to the next. In this paper, we loosen this restrictive constraint and propose conditioned regression models (including convolutional neural networks and random forests) that can effectively exploit the additional kernel information during both training and inference. This allows training a single model, whereas previous methods need to be re-trained for every blur kernel individually to achieve good results, as we demonstrate in our evaluations. We also empirically show that the proposed conditioned regression models (i) can effectively handle scenarios where the blur kernel is different for each image and (ii) outperform related approaches trained for only a single kernel.
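To make the non-blind setting concrete, the sketch below uses the usual image formation model, in which a low-resolution image is obtained by blurring the high-resolution image with a kernel k and subsampling by a factor s. It is a minimal pure-Python illustration under stated assumptions. Noise is omitted, and the helper names (`blur`, `downsample`, `degrade`, `conditioned_features`) are hypothetical. Appending the flattened kernel to each input patch is one simple way to condition a regressor on k, assumed here for illustration; it is not necessarily the exact scheme used by the paper's CNN or random forest models.

```python
from typing import List

Image = List[List[float]]


def blur(img: Image, kernel: Image) -> Image:
    """Filter img with a square, odd-sized kernel using zero padding ("same" output size).

    This is a correlation; for the symmetric kernels used below it equals convolution.
    """
    h, w, r = len(img), len(img[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += kernel[di + r][dj + r] * img[ii][jj]
            out[i][j] = acc
    return out


def downsample(img: Image, s: int) -> Image:
    """Keep every s-th pixel in both directions."""
    return [row[::s] for row in img[::s]]


def degrade(hr: Image, kernel: Image, s: int) -> Image:
    """Assumed non-blind formation model (noise omitted): blur with k, then subsample by s."""
    return downsample(blur(hr, kernel), s)


def conditioned_features(patch: Image, kernel: Image) -> List[float]:
    """Hypothetical conditioning: flattened LR patch followed by the flattened kernel."""
    return [v for row in patch for v in row] + [v for row in kernel for v in row]


if __name__ == "__main__":
    hr = [[float((i + j) % 2) for j in range(4)] for i in range(4)]  # 4x4 checkerboard
    delta = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]  # no blur
    box = [[1.0 / 9] * 3 for _ in range(3)]  # 3x3 box blur
    print(degrade(hr, delta, 2))  # all zeros: only the "0" squares are sampled
    print(degrade(hr, box, 2))    # non-zero: blur mixes in the neighboring "1" squares
```

The same high-resolution checkerboard produces two different low-resolution inputs depending on k. A regressor trained for one kernel can therefore face inputs it never saw during training. A conditioned model avoids this by receiving k as part of its input.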

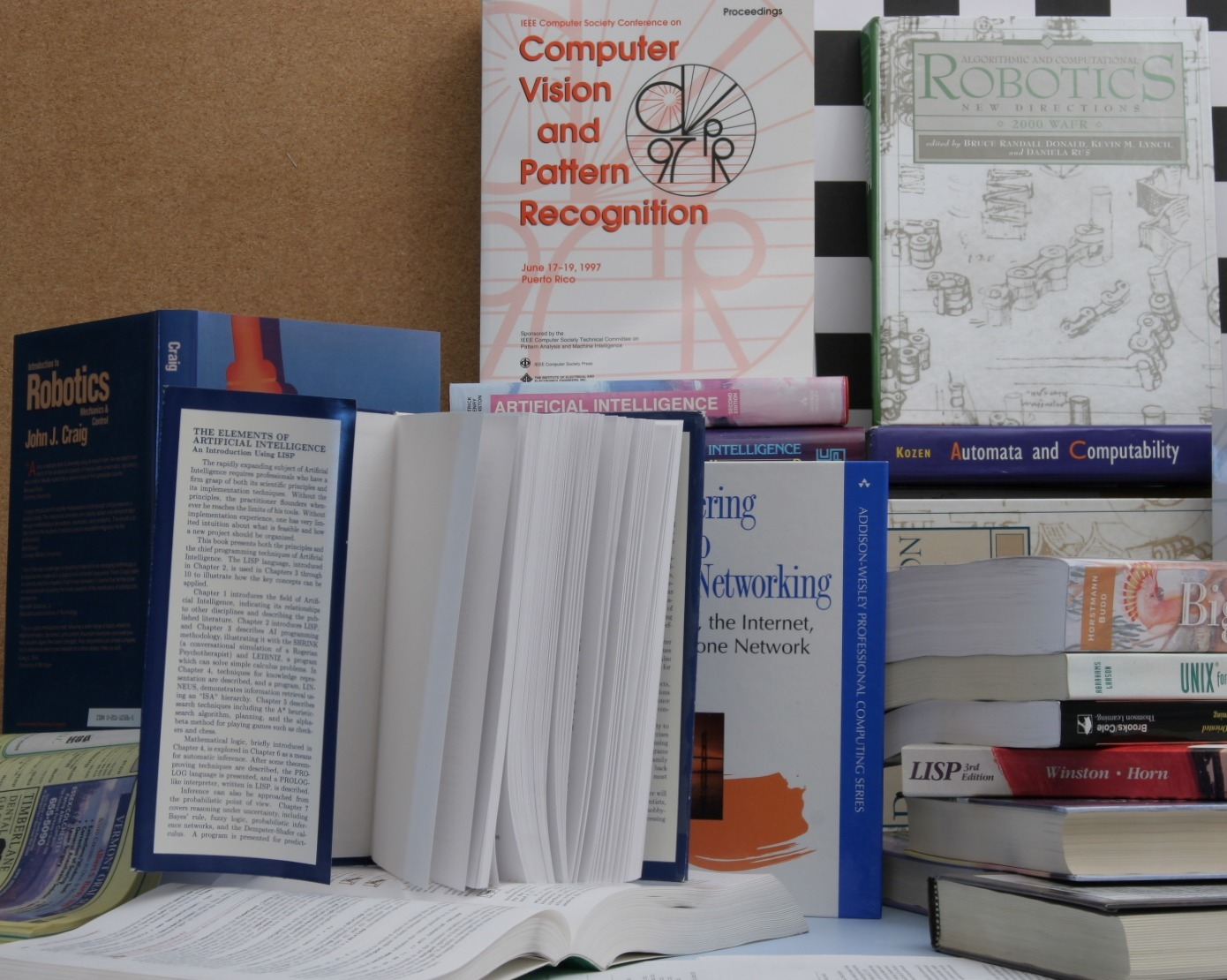

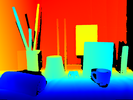

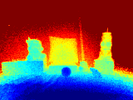

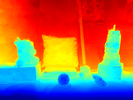

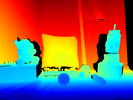

Low-resolution images can be formed with different blur kernels and should be treated accordingly. This figure illustrates the effect of a wrong blur kernel assumption on the super-resolution quality (the original blur kernel is depicted in the top left corner of the first image). Not only standard bicubic upscaling (second image) but also state-of-the-art methods like A+ (third image) trained on a wrong kernel yield clearly inferior results compared to our conditioned regression model (SRF-CAB, fourth image).

Conditioned Regression Models for Non-Blind Single Image Super-Resolution [bib] [supp] [poster]

Contact: Gernot Riegler, Matthias Rüther

http://rvlab.icg.tugraz.at/project_page/project_tofusion/downloads/ICCV15_MB_results.tar.gz


Variational Depth Superresolution using Example-Based Edge Representations

In this work we propose a novel method for depth image superresolution that combines recent advances in example-based upsampling with variational superresolution based on a known blur kernel. Most traditional depth superresolution approaches use additional high-resolution intensity images as guidance. In our method, we instead learn a dictionary of edge priors from an external database of high- and low-resolution examples. In a novel variational sparse coding approach, this dictionary is used to infer strong edge priors. In addition to the traditional sparse coding constraints, our optimization minimizes the difference in the overlap of neighboring edge patches. These edge priors are used in a novel variational superresolution as anisotropic guidance of a higher-order regularization. Both the sparse coding and the variational superresolution of the depth are solved using a primal-dual formulation. In an exhaustive numerical and visual evaluation, we show that our method clearly outperforms existing approaches on multiple real and synthetic datasets.
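The sparse coding step can be illustrated with the standard L1-regularized formulation min_a 0.5‖Da − y‖² + λ‖a‖₁, where y is an observed patch and D a dictionary with one atom per column. The sketch below solves this problem with ISTA (iterative shrinkage-thresholding). ISTA is a generic solver swapped in here for illustration. The method described above instead uses a primal-dual scheme and adds a term on the overlap of neighboring edge patches; both are omitted. The dictionary, λ, and step size are assumptions.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = patch dimensions, columns = dictionary atoms


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t * |v| (shrinks v towards zero by t)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def ista(D: Matrix, y: Vector, lam: float, step: float, iters: int = 200) -> Vector:
    """Minimize 0.5 * ||D a - y||^2 + lam * ||a||_1 by iterative shrinkage-thresholding.

    Convergence requires step <= 1 / L, with L the largest eigenvalue of D^T D.
    """
    m, n = len(D), len(D[0])
    a = [0.0] * n
    for _ in range(iters):
        # residual of the data term: r = D a - y
        r = [sum(D[i][k] * a[k] for k in range(n)) - y[i] for i in range(m)]
        # gradient step on the data term: a - step * D^T r
        g = [a[k] - step * sum(D[i][k] * r[i] for i in range(m)) for k in range(n)]
        # proximal step on the L1 term
        a = [soft_threshold(v, step * lam) for v in g]
    return a
```

With an orthonormal dictionary such as the identity and step = 1, ISTA reaches the closed-form answer after one iteration: every coefficient of y is soft-thresholded by λ. For y = [3.0, 0.25, -1.5] and λ = 0.5 this gives [2.5, 0.0, -1.0]. Overcomplete edge dictionaries need the full iteration.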

Contact: Matthias Rüther

Paper

Supplemental Material

Middlebury Evaluation Results

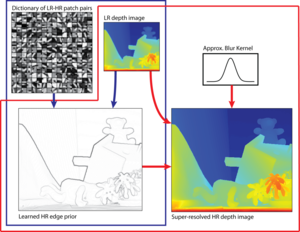

Variational Depth Superresolution using Example-Based Edge Representations. Our method estimates strong edge priors from a given LR depth image and a learned dictionary using a novel sparse coding approach (blue part). The learned HR edge prior is used as anisotropic guidance in a novel variational SR using higher-order regularization (red part).
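The primal-dual formulation behind the variational SR can be illustrated on a much simpler problem: one-dimensional weighted total variation denoising, min_u Σ_i w_i |u_{i+1} − u_i| + (λ/2) Σ_i (u_i − f_i)². The sketch below applies a first-order primal-dual iteration in the style of Chambolle and Pock. The per-edge weights w_i act as a crude 1D stand-in for edge guidance: a small weight where an edge is expected lets the solution jump there. This is an assumption-laden illustration, not the paper's solver. It has no anisotropic tensor and no higher-order (TGV) term, and λ, the weights, and the step sizes are arbitrary.

```python
from typing import List


def grad(u: List[float]) -> List[float]:
    """Forward differences (K u)_i = u[i+1] - u[i], length n - 1."""
    return [u[i + 1] - u[i] for i in range(len(u) - 1)]


def grad_adjoint(q: List[float], n: int) -> List[float]:
    """Adjoint K^T q of the forward difference operator (minus the discrete divergence)."""
    out = [0.0] * n
    for i, qi in enumerate(q):
        out[i] -= qi
        out[i + 1] += qi
    return out


def weighted_tv_denoise(f: List[float], w: List[float], lam: float,
                        iters: int = 500, tau: float = 0.45, sigma: float = 0.45) -> List[float]:
    """Primal-dual iterations for min_u sum_i w[i]|u[i+1]-u[i]| + lam/2 * sum_i (u[i]-f[i])^2.

    Step sizes must satisfy tau * sigma * ||K||^2 <= 1; here ||K||^2 <= 4.
    """
    n = len(f)
    u = list(f)
    u_bar = list(f)
    q = [0.0] * (n - 1)
    for _ in range(iters):
        # dual ascent step, then projection onto the weighted box |q_i| <= w_i
        g = grad(u_bar)
        q = [max(-w[i], min(w[i], q[i] + sigma * g[i])) for i in range(n - 1)]
        # primal descent step combined with the proximal map of the quadratic data term
        kt = grad_adjoint(q, n)
        u_new = [(u[j] - tau * kt[j] + tau * lam * f[j]) / (1.0 + tau * lam) for j in range(n)]
        # over-relaxation (extrapolation) of the primal variable
        u_bar = [2.0 * u_new[j] - u[j] for j in range(n)]
        u = u_new
    return u
```

For f = [0, 0, 0, 1, 1, 1] and lam = 1, weights [10, 10, 0, 10, 10] keep the step intact because the jump is free. Uniform weights of 10 flatten the signal to its mean 0.5.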

Image Guided Depth Map Upsampling

In this project we present a novel method for the challenging problem of depth image upsampling. Modern depth cameras such as the Kinect or ToF cameras deliver dense, high-quality depth measurements but are limited in their lateral resolution. To overcome this limitation, we formulate a convex optimization problem using higher-order regularization for depth image upsampling. In this optimization, an anisotropic diffusion tensor calculated from a high-resolution intensity image is used to guide the upsampling. We derive a numerical algorithm based on a primal-dual formulation that is efficiently parallelized and runs at multiple frames per second. We show that this novel upsampling clearly outperforms state-of-the-art approaches in terms of speed and accuracy on the widely used Middlebury 2007 datasets. Furthermore, we introduce novel datasets with highly accurate ground truth, which, for the first time, make it possible to benchmark depth upsampling methods on real sensor data.
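The anisotropic diffusion tensor can be sketched per pixel. The form assumed here, common in image-guided TGV, builds the tensor from the normalized intensity gradient n = ∇I/|∇I| and its perpendicular n⊥ as exp(−β|∇I|^γ) n nᵀ + n⊥ n⊥ᵀ. This weakens regularization across intensity edges but keeps it along them. The function names and the default values of β and γ are hypothetical choices for illustration.

```python
import math
from typing import List, Tuple

Tensor = List[List[float]]


def diffusion_tensor_sqrt(gx: float, gy: float, beta: float = 5.0, gamma: float = 0.8) -> Tensor:
    """2x2 tensor exp(-beta * |g|^gamma) n n^T + n_perp n_perp^T for intensity gradient g."""
    mag = math.hypot(gx, gy)
    if mag == 0.0:
        # flat intensity region: no preferred direction, isotropic regularization
        return [[1.0, 0.0], [0.0, 1.0]]
    nx, ny = gx / mag, gy / mag  # unit vector across the edge
    px, py = -ny, nx  # unit vector along the edge
    c = math.exp(-beta * mag ** gamma)  # damping factor across the edge
    return [
        [c * nx * nx + px * px, c * nx * ny + px * py],
        [c * ny * nx + py * px, c * ny * ny + py * py],
    ]


def apply_tensor(T: Tensor, v: Tuple[float, float]) -> Tuple[float, float]:
    """Multiply the 2x2 tensor with a (depth) gradient vector."""
    return (T[0][0] * v[0] + T[0][1] * v[1], T[1][0] * v[0] + T[1][1] * v[1])
```

For a unit horizontal intensity gradient, the tensor is approximately diag(exp(−β), 1). The part of a depth gradient that crosses the intensity edge is strongly damped, while the part along the edge passes through unchanged, so depth discontinuities tend to align with intensity edges.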

Upsampling of a low-resolution depth image using an additional high-resolution intensity image through image-guided anisotropic Total Generalized Variation. Depth maps are color-coded for better visualization.

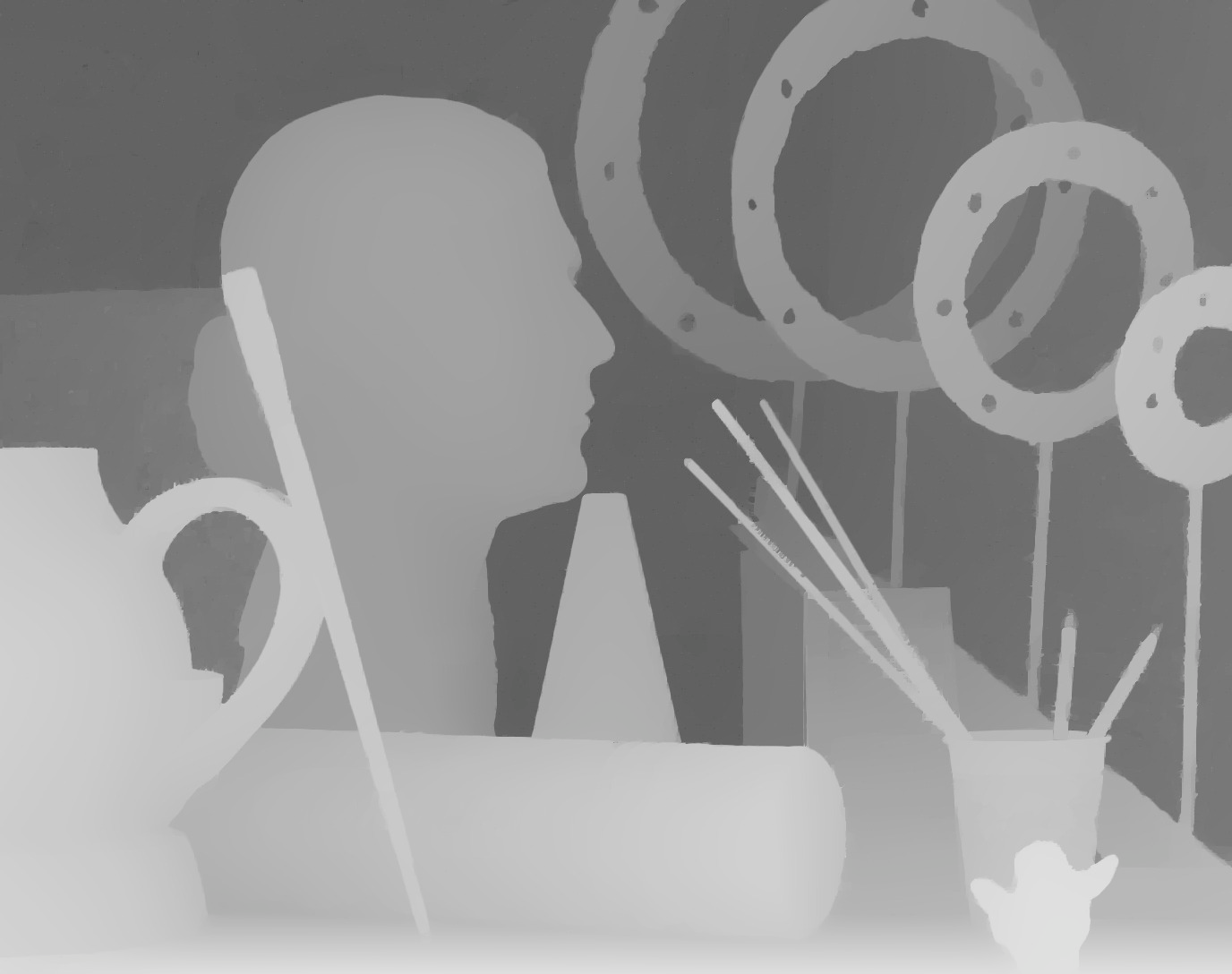

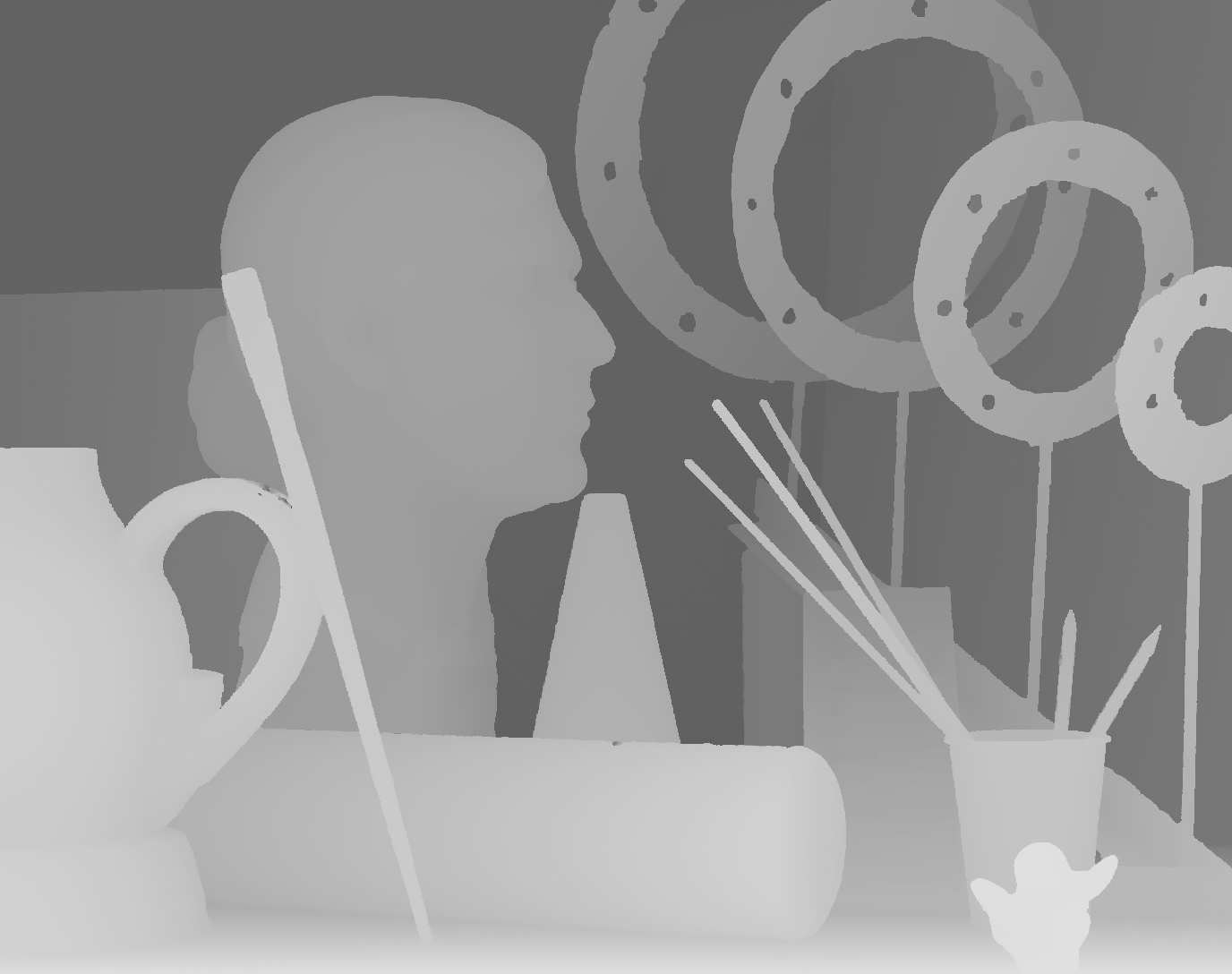

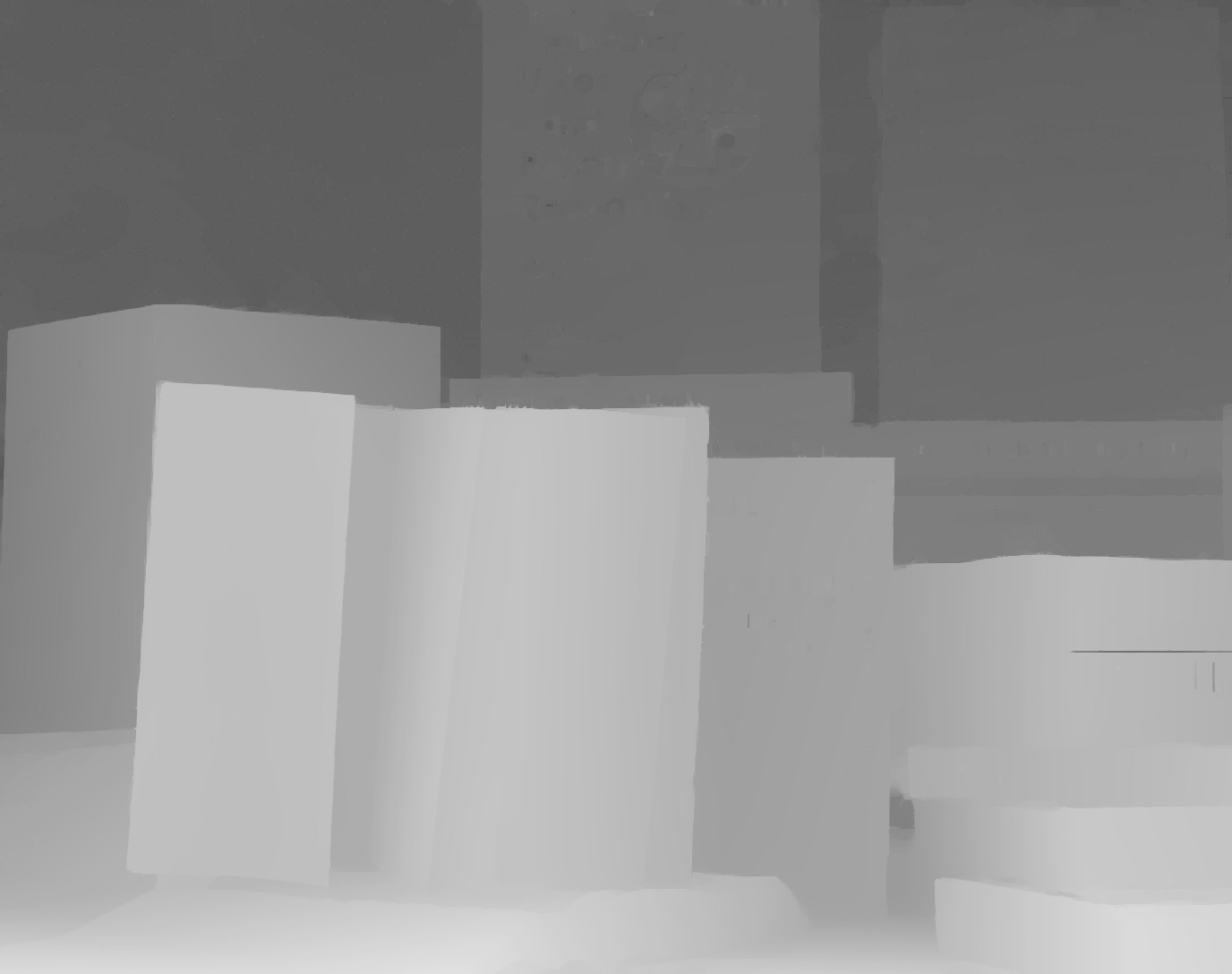

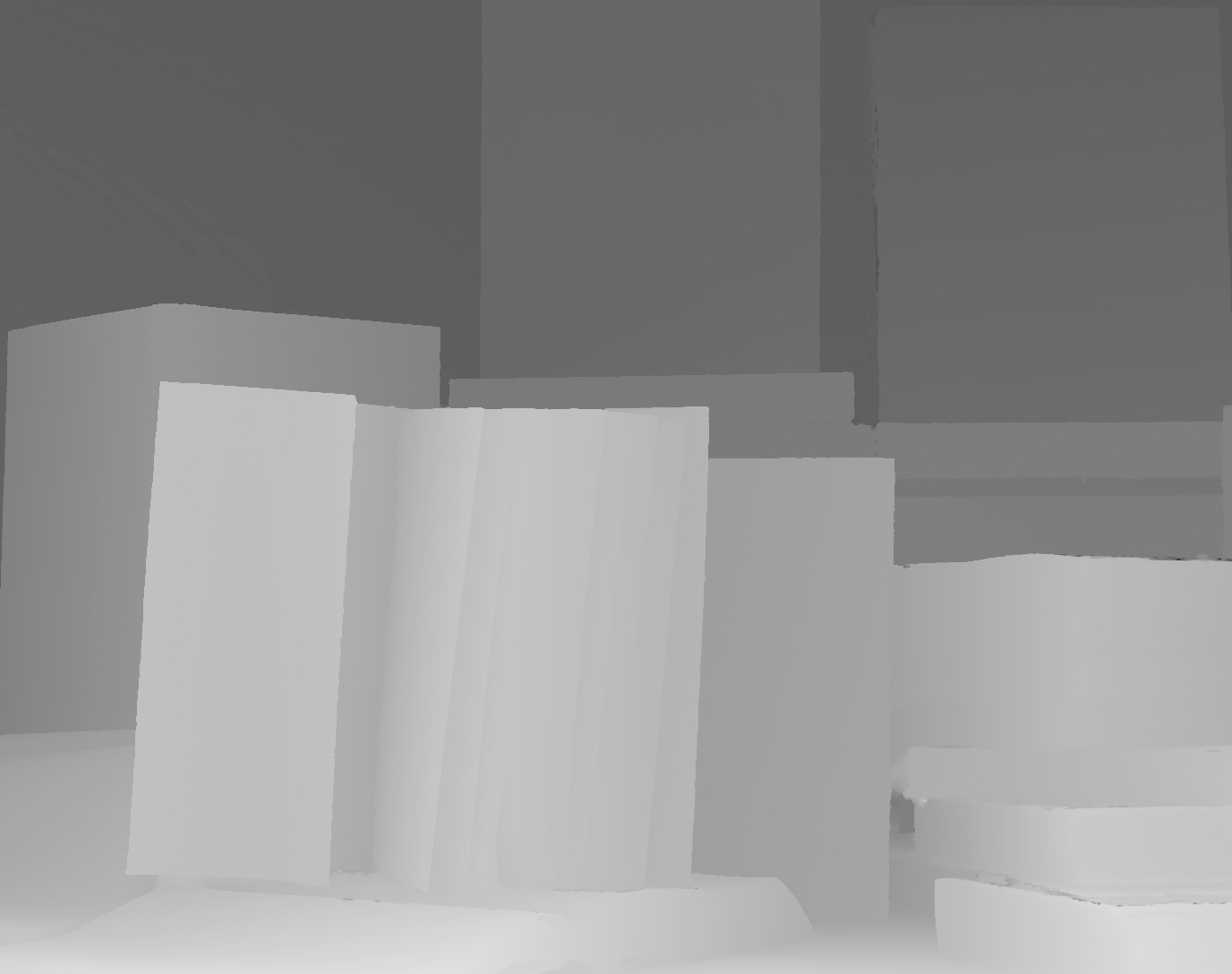

Visual evaluation of x4 upsampling on the Middlebury 2007 dataset. Images from left to right: low-resolution depth input of size 172x136 with added noise, high-resolution intensity image of size 1390x1110, upsampling result of Image Guided Depth Upsampling of size 1390x1110, ground truth depth image.

IMPORTANT: All errors in the table are given as Mean Absolute Error (MAE), not as RMSE as reported in the paper.
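MAE and RMSE values are not directly comparable, because RMSE weights large errors more heavily. The sketch below computes both metrics, assuming per-pixel errors over matching depth values with no masking of invalid pixels. The actual evaluation protocol may differ.

```python
import math
from typing import Sequence


def mae(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Mean absolute error between predicted and ground truth depth values."""
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same length")
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)


def rmse(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Root mean squared error; never smaller than the MAE of the same errors."""
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same length")
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))
```

A single large error illustrates the difference. Per-pixel errors of [0, 0, 0, 4] give an MAE of 1.0 but an RMSE of 2.0, while uniform errors of 1 give 1.0 for both.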


The RMSE results can be found in the table below. Quantitative Middlebury evaluation measured as Root Mean Squared Error (RMSE).

[1] Q. Yang, R. Yang, J. Davis, and D. Nister. Spatial-depth super resolution for range images. In Proc. CVPR, 2007.

[2] K. He, J. Sun, and X. Tang. Guided image filtering. In Proc. ECCV, 2010.

[3] J. Diebel and S. Thrun. An application of Markov random fields to range sensing. In Proc. NIPS, 2006.

[4] D. Chan, H. Buisman, C. Theobalt, and S. Thrun. A Noise-Aware Filter for Real-Time Depth Upsampling. In Proc. ECCV Workshops, 2008.

[5] J. Park, H. Kim, Y.-W. Tai, M. Brown, and I. Kweon. High quality depth map upsampling for 3D-ToF cameras. In Proc. ICCV, 2011.

[6] D. Ferstl, C. Reinbacher, R. Ranftl, M. Ruether, and H. Bischof. Image Guided Depth Upsampling using Anisotropic Total Generalized Variation. In Proc. ICCV, 2013.


Visual evaluation of approximately x6.25 upsampling on our novel real-world dataset, available for download at the ToFMark depth upsampling evaluation page. Images from left to right: low-resolution depth input image from a PMD Nano Time-of-Flight camera of size 160x120 with added noise, high-resolution intensity image of size 810x610, upsampling result of Image Guided Depth Upsampling of size 810x610, ground truth depth image acquired by a high-accuracy structured light scanner.

[1] J. Kopf, M. F. Cohen, D. Lischinski, and M. Uyttendaele. Joint bilateral upsampling. ACM Transactions on Graphics, 26(3), 2007.

[2] K. He, J. Sun, and X. Tang. Guided image filtering. In Proc. ECCV, 2010.

[3] D. Ferstl, C. Reinbacher, R. Ranftl, M. Ruether, and H. Bischof. Image Guided Depth Upsampling using Anisotropic Total Generalized Variation. In Proc. ICCV, 2013.

Image Guided Depth Upsampling using Anisotropic Total Generalized Variation [bib]


Contact: Christian Reinbacher, Matthias Rüther


Synthetic Data


The RMSE results can be found in the table below.

Evaluation on ToFMark Dataset



Robot Vision

News

2016/12/16: New Open Student Position: LIDAR Metrology Tooling

2016/12/01: New Open Student Position: Robotic Charging of Electric Vehicles

2016/07/15: Accepted to BMVC 2016

Our paper "A Deep Primal-Dual Network for Guided Depth Super-Resolution" has been accepted for oral presentation at the British Machine Vision Conference 2016 held at the University of York, United Kingdom.

2016/07/11: Accepted to ECCV 2016

Our paper "ATGV-Net: Accurate Depth Superresolution" has been accepted at the European Conference on Computer Vision 2016 in Amsterdam, The Netherlands.

2015/10/07: Accepted to ICCV 2015 Workshop: TASK-CV

Our paper "Anatomical landmark detection in medical applications driven by synthetic data" has been accepted at the IEEE International Conference on Computer Vision 2015 Workshop on Transferring and Adapting Source Knowledge in Computer Vision (TASK-CV).

2015/09/14: Camera calibration code online

The camera calibration toolbox accompanying our paper "Learning Depth Calibration of Time-of-Flight Cameras" is available here.

2015/09/07: Accepted to ICCV 2015

Our papers "Variational Depth Superresolution using Example-Based Edge Representations" and "Conditioned Regression Models for Non-Blind Single Image Super-Resolution" have been accepted at the IEEE International Conference on Computer Vision 2015, December 13-16, Santiago, Chile.

2015/07/03: Accepted to BMVC 2015

Our papers "Depth Restoration via Joint Training of a Global Regression Model and CNNs" and "Learning Depth Calibration of Time-of-Flight Cameras" have been accepted as poster presentations at the 26th British Machine Vision Conference, September 7-10, Swansea, United Kingdom.