User’s Flying Organizer (UFO) - Semi-Autonomous Aerial Vehicles for Augmented Reality

| Duration | 2015 - 2018 |

| Sponsors | Austrian Science Fund (FWF) |

| Project-Members | Werner Alexander Isop, Okan Erat |

| Project-Lead | Prof. Dr. Dieter Schmalstieg |

| Abstract | Augmented Reality (AR) presents information registered to a 3D environment and commonly relies on Head-Mounted Displays (HMDs) or handheld devices. Spatial AR is an unencumbered alternative: it uses visual projection techniques to augment surfaces directly in the environment. However, projectors have so far been stationary and cannot cover large areas with augmentation, even when mounted on swiveling platforms. Project UFO addresses this problem by combining spatial AR with robotics. Specifically, by focusing on mobile robotics, human-machine interaction and spatial augmented reality, the project aims for an entirely novel AR user experience in which a small semi-autonomous micro aerial vehicle (MAV) with on-board projection devices creates personal projection screens on arbitrary surfaces in the environment. |

Project Overview (2015)

Project Work

During the first year, the main focus of the project was to design and deploy an autonomous MAV flight system for indoor environments. Tasks included setting up a net-protected indoor flying space, the so-called droneSpace, tracked by a highly accurate OptiTrack motion tracking system, and designing and deploying an MAV prototype based on the commercial Parrot AR.Drone 2.0 platform (Fig. 1). To control the prototype, a ROS-based control framework was developed from scratch. The prototype was equipped with a custom-built, lightweight and small-sized laser projection system for projecting instructional imagery into the 3D environment. At the end of the year, a first evaluation was conducted of the MAV flight system's capabilities and of the visual and perceptual quality of the projected patterns.

Fig. 1: First lightweight MAV prototype, designed for indoor flight in narrow environments during the UFO project.

Teaching and Supervision

As a first step, the autonomous MAV flight system was used in the practical of the Virtual Reality lecture during the summer term 2015. Students focused on designing and implementing a smartphone-based Virtual Reality interface (Google Cardboard) to navigate the MAV indoors. The students' head orientation was tracked by the smartphone's inertial sensors and then sent to the MAV as velocity commands. The practicals of this term's lecture, administered by Prof. Dieter Schmalstieg, were assisted by Peter Mohr-Ziak and Werner Alexander Isop.
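The description above does not say how head orientation was turned into velocity commands. The following Python sketch shows one plausible mapping under assumptions that are not from the project: the smartphone delivers its orientation as a unit quaternion (w, x, y, z), head pitch drives forward speed, head roll drives lateral speed, and yaw relative to a calibrated reference heading drives the yaw rate. A dead zone suppresses small involuntary head motions, and the output is saturated. The names `head_to_velocity` and `VelocityCommand`, as well as all gains and limits, are hypothetical; in a ROS setup such a command would typically be published as a `geometry_msgs/Twist` message.

```python
import math
from dataclasses import dataclass


@dataclass
class VelocityCommand:
    """Hypothetical velocity command for the MAV (body frame)."""
    vx: float        # forward velocity in m/s
    vy: float        # lateral velocity in m/s
    yaw_rate: float  # yaw rate in rad/s


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> tuple:
    """Convert a unit quaternion to (roll, pitch, yaw) in radians, ZYX convention."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to avoid math domain errors from rounding near +/-90 degrees pitch.
    sinp = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sinp)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


def scale_with_deadzone(angle: float, deadzone: float, max_angle: float, max_value: float) -> float:
    """Zero inside the dead zone, linear up to max_angle, saturated beyond it."""
    if abs(angle) <= deadzone:
        return 0.0
    ratio = min((abs(angle) - deadzone) / (max_angle - deadzone), 1.0)
    return math.copysign(ratio * max_value, angle)


def head_to_velocity(
    q: tuple,
    yaw_reference: float = 0.0,
    deadzone: float = math.radians(5.0),
    max_angle: float = math.radians(30.0),
    max_speed: float = 0.5,
    max_yaw_rate: float = 0.8,
) -> VelocityCommand:
    """Map a head orientation quaternion (w, x, y, z) to a velocity command.

    Assumed mapping: pitch -> forward speed, roll -> lateral speed,
    yaw relative to yaw_reference -> yaw rate. Signs depend on the frames used.
    """
    roll, pitch, yaw = quaternion_to_euler(*q)
    # Wrap the yaw difference to [-pi, pi], so 170 deg vs. -170 deg gives -20 deg.
    yaw_error = math.atan2(math.sin(yaw - yaw_reference), math.cos(yaw - yaw_reference))
    return VelocityCommand(
        vx=scale_with_deadzone(pitch, deadzone, max_angle, max_speed),
        vy=scale_with_deadzone(roll, deadzone, max_angle, max_speed),
        yaw_rate=scale_with_deadzone(yaw_error, deadzone, max_angle, max_yaw_rate),
    )
```

For a head pitched by 20°, `head_to_velocity((math.cos(math.radians(10)), 0.0, math.sin(math.radians(10)), 0.0))` yields a forward speed of about 0.3 m/s, because the 15° beyond the dead zone is 60 % of the 25° control range. Which rotation sign means "forward" depends on how the phone's frame is aligned with the head, so the signs would have to be checked on the real device.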

Fig. 2: Photos taken during the Virtual Reality Lecture (VRVU) in Summer Term 2015

Project Overview (2016)

Conference Contribution - Micro Aerial Projector

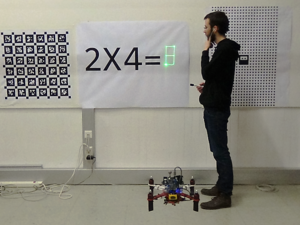

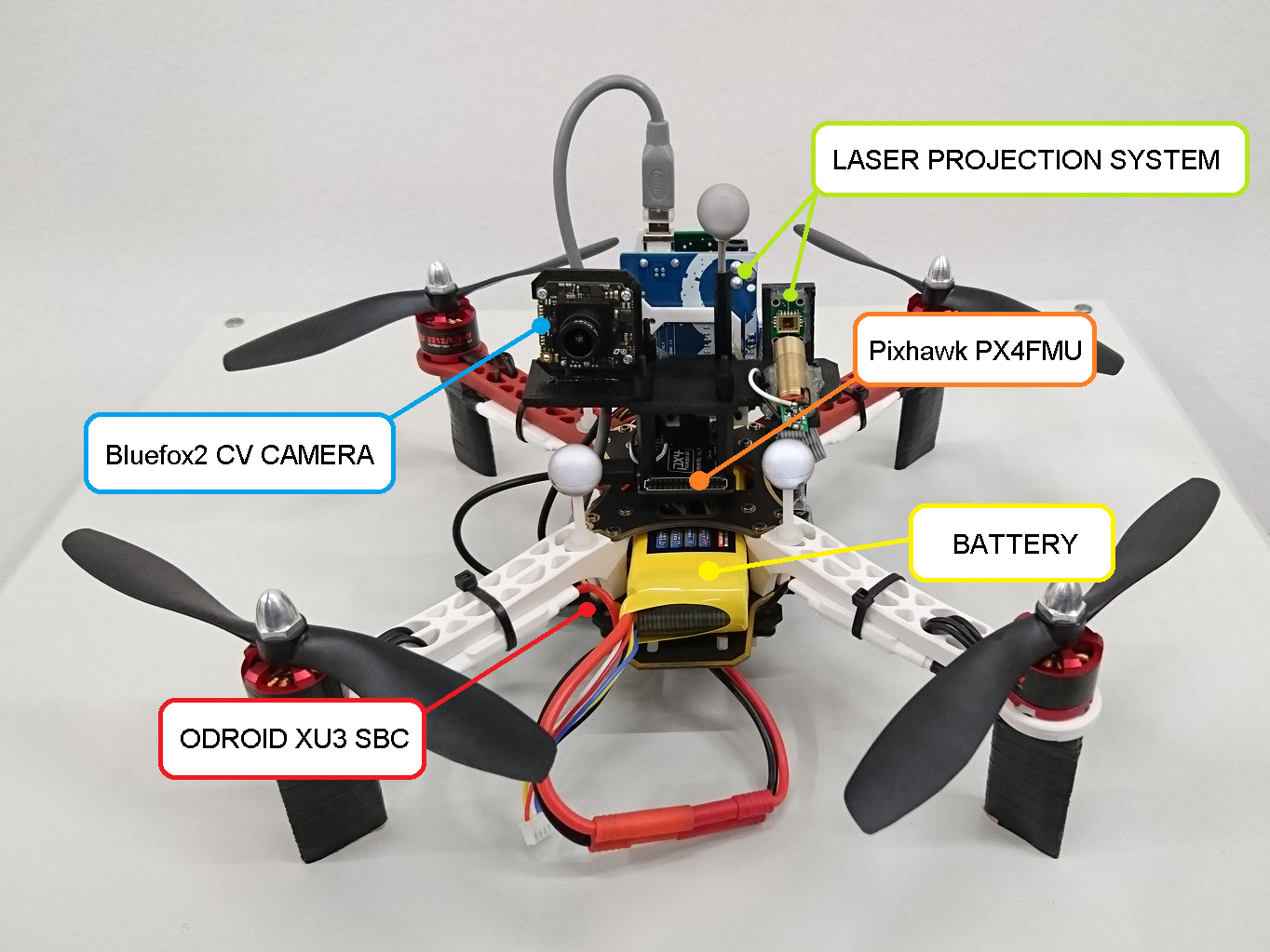

We, the UFO team, are happy to announce that our work on the Micro Aerial Projector (MAP) was accepted as an IEEE paper, supervised by Prof. Dieter Schmalstieg and Ass.Prof. Friedrich Fraundorfer. Building on the previous year's work, the autonomous MAV flight system, including a custom-built small-sized projector and the corresponding ROS-based control framework, was continuously improved in the droneSpace. The latest MAV prototype builds on the more capable DJI F330 frame. In addition, a brighter projection system and a monocular camera were added. In collaboration with the Advanced Interactive Technology Lab (AIT) at ETH Zürich, a PX4 Pixhawk was used for low-level flight control of the MAV. By tackling the problems of accurately stabilizing projected images and sensing visual information during flight, the goal was to demonstrate the capabilities of the MAP for human-machine interaction use cases. The MAP acted as an autonomous teaching assistant, supporting a student in solving an equation (Fig. 3). The Micro Aerial Projector was presented by the first author, Werner Alexander Isop, at the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) in Daejeon, South Korea, in October 2016.
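The paragraph above does not describe how the projected image was stabilized. As a hypothetical illustration only, and not necessarily the MAP's method, a common textbook approach treats the projector as an inverse pinhole camera. Given the projector pose from motion tracking, the homography H = K [R e1 | R e2 | R o + t] maps a point (u, v) on the wall plane X = o + u e1 + v e2 to a projector pixel. Recomputing H every frame from the tracked pose and pre-warping the content with it keeps the image fixed on the wall while the MAV drifts. The intrinsics, the pose convention and the function names below are assumptions.

```python
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vector3 = Sequence[float]


def mat_vec(m: Matrix3, v: Vector3) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def plane_to_projector_homography(
    K: Matrix3, R: Matrix3, t: Vector3,
    origin: Vector3, e1: Vector3, e2: Vector3,
) -> List[List[float]]:
    """Hypothetical helper: homography from wall-plane coordinates (u, v) to projector pixels.

    K: projector intrinsics (pinhole model).
    R, t: world-to-projector transform, X_p = R X_w + t (from motion tracking).
    origin, e1, e2: wall plane in world coordinates, X_w = origin + u*e1 + v*e2.
    """
    c1 = mat_vec(R, e1)
    c2 = mat_vec(R, e2)
    c3 = [a + b for a, b in zip(mat_vec(R, origin), t)]
    # Columns of K [R e1 | R e2 | R origin + t].
    cols = [mat_vec(K, c) for c in (c1, c2, c3)]
    return [[cols[j][i] for j in range(3)] for i in range(3)]


def project(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map a wall-plane point (u, v) to a projector pixel (x, y)."""
    x, y, w = mat_vec(H, (u, v, 1.0))
    return x / w, y / w
```

With assumed intrinsics fx = fy = 1000 and principal point (640, 360), a wall 2 m in front of the projector and the point u = 0.2 m, the target pixel lies at x = 740. If the MAV drifts 0.1 m to the right (t = (-0.1, 0, 0)), the same wall point must be drawn at x = 690, which is exactly the correction a per-frame pre-warp (e.g. with OpenCV's `cv2.warpPerspective`) would apply.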

Fig. 3: The Micro Aerial Projector supporting a student in solving an equation (left) and the MAV prototype (right).

The authors also wish to thank the Bachelor students David Fröhlich and Sebastian Reicher for their support.

Project Collaborations

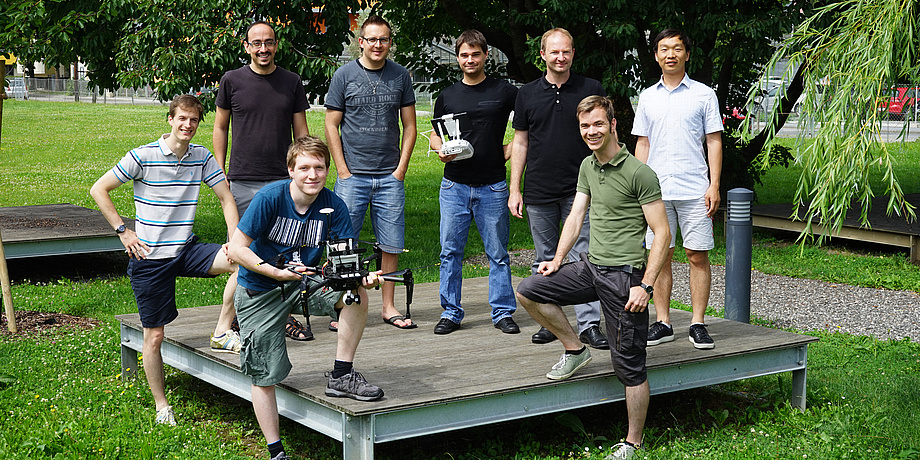

Together with the Aerial Vision Group, a team entered the international drone competition DJI Challenge 2016 under the supervision of Ass.Prof. Friedrich Fraundorfer. Set in a rescue scenario, the challenge addressed mobile robotics, path planning and navigation, reconstruction, and user-interface design. The team competed against more than 100 teams worldwide and reached the finals, which took place in Rome, New York, in August 2016. The team members (Fig. 4, from left to right) were Daniel Muschik (Institute of Automation and Control), Jesus Pestana, Felix Egger, Manuel Hofer, Werner Alexander Isop, Ass.Prof. Friedrich Fraundorfer, Michael Maurer and Guan Banglei.

Fig. 4: The TU Graz team that participated in the DJI Challenge finals.

Teaching and Supervision

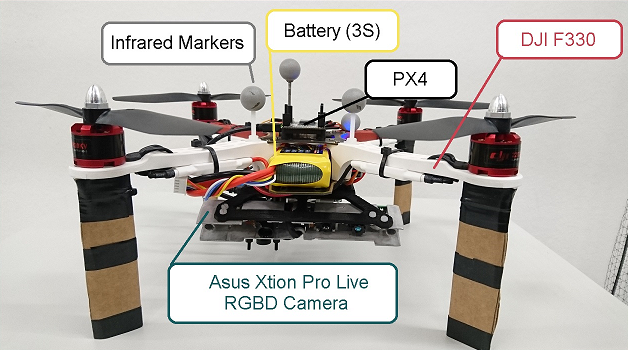

Fig. 5: MAV prototype for semi-autonomous 3D reconstruction.

Based on the autonomous MAV flight system of the Micro Aerial Projector, an MAV prototype equipped with an RGB-D sensor (Fig. 5) was designed to semi-autonomously reconstruct 3D objects during flight. The work was done as part of a Bachelor's thesis in the winter term 2016 (David Fröhlich, "3D-Object Reconstruction Using A Camera Drone With RGBD-Sensor", Institute of Computer Graphics and Vision, 2016; 1st supervisor: Friedrich Fraundorfer; 2nd supervisor: Werner Alexander Isop).

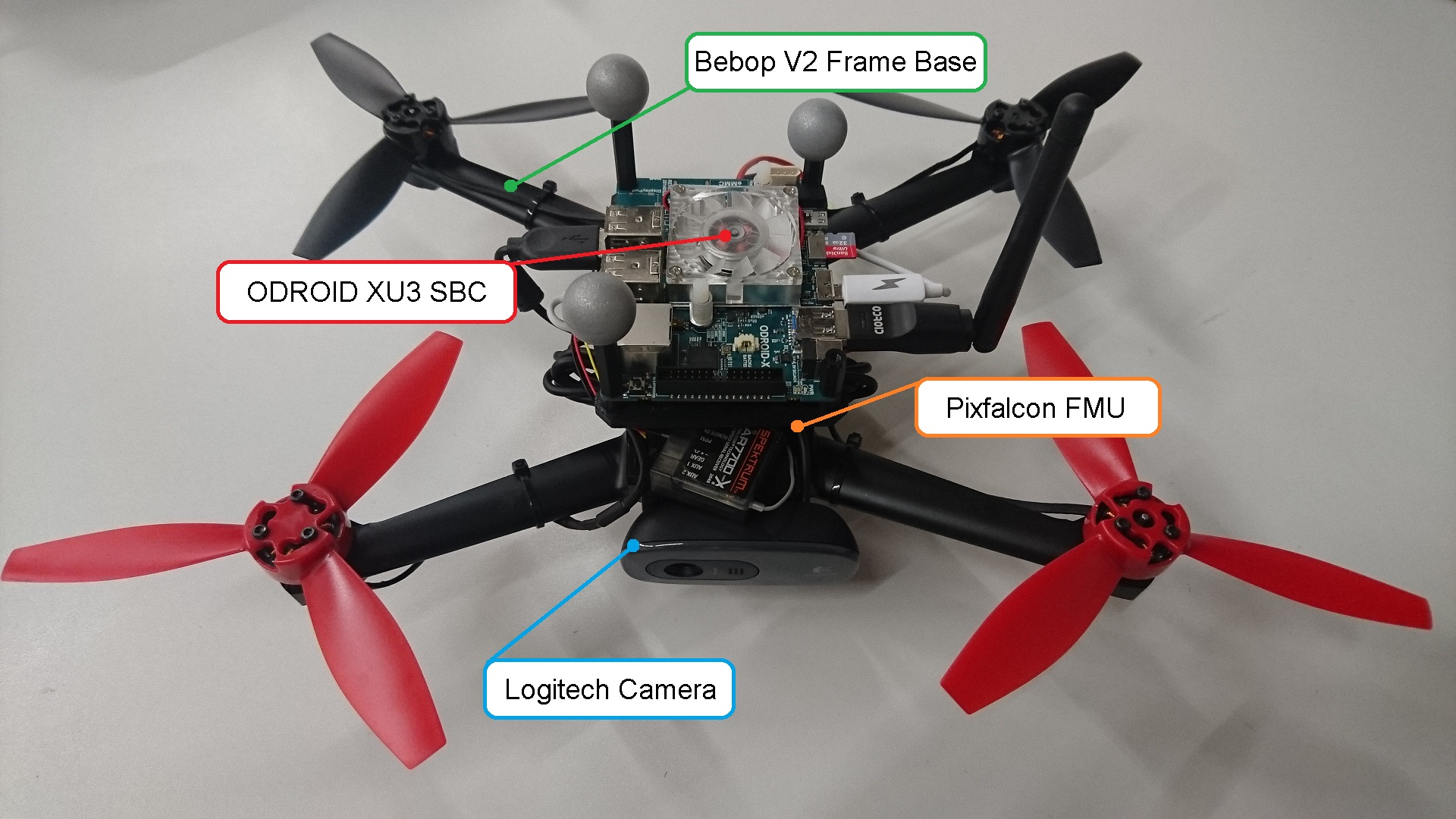

Framework Upgrade for Educational Purposes and Future Work on Mixed-Reality Teleoperation Interfaces

During the winter term 2016/2017, Werner Alexander Isop introduced a further improved version of the autonomous MAV flight system (MAV prototype and ROS-based control framework) as part of the Camera Drones lecture in the droneSpace. Changes included upgrading the ROS distribution from "Indigo" to the more recent "Kinetic", extensive parametrization, and a clean-up of the individual ROS nodes. In addition, the MAV prototype (Fig. 6) received a lighter frame based on the Parrot Bebop 2. Furthermore, the outdated PX4FMU flight controller was replaced by a Pixfalcon flight management unit with updated firmware.

The autonomous MAV flight system will also be used for future project work in 2017. The plan is to combine the system with mixed-reality smart glasses for exploring hidden, narrow environments.

Fig. 6: Third and final prototype, as part of the autonomous MAV flight system, for exploration of narrow environments.

Project Overview (2017/2018)

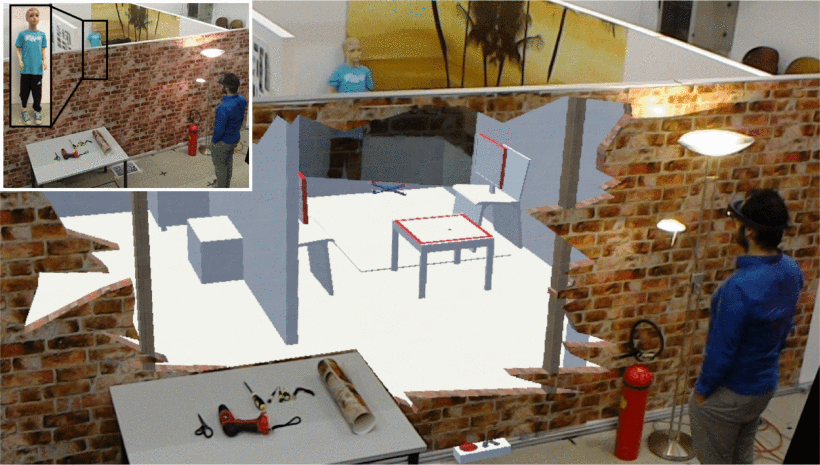

Conference Contribution - Drone Augmented Human Vision

We, the UFO team, are happy to announce that our work on Drone Augmented Human Vision was accepted as an IEEE paper, supervised by Prof. Dieter Schmalstieg and Ass.Prof. Denis Kalkofen. It was presented by the first author, Okan Erat, at the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR) in Reutlingen, Germany, in March 2018. The work focuses on a mixed-reality interface that provides X-ray vision (Fig. 7) with a Microsoft HoloLens, combined with a modified version of the autonomous MAV flight system developed by Werner Alexander Isop.

Fig. 7: The human operator using drone augmented human vision to explore hidden areas.

The autonomous MAV flight system was complemented with a lighter prototype based on the Parrot Bebop 2 (Fig. 8), and an updated version of the ROS-based control framework was implemented for it (Fig. 9). Designed especially to overcome typical problems that arise during flight in narrow indoor environments, the flight system was also used successfully for other IEEE conference contributions, such as the Micro Aerial Projector (MAP). In addition, it was used for educational purposes in the Camera Drones lecture administered by Ass.Prof. Friedrich Fraundorfer.

Fig. 8: Custom MAV platform designed for exploration of narrow indoor environments.

Fig. 9: Overview of the MAV flight system, interfaced by the Mixed Reality HMD (Microsoft HoloLens).

For more details about the contribution, see also the following recently published news articles:

- What Do You Get When You Cross A Drone With A HoloLens – XRay Vision.

- See Straight Through Walls by Augmenting Your Eyeballs With Drones

The authors also wish to thank the Bachelor students David Fröhlich and Sebastian Reicher for their support during the whole project.