Large Scale Metric Learning from Equivalence Constraints

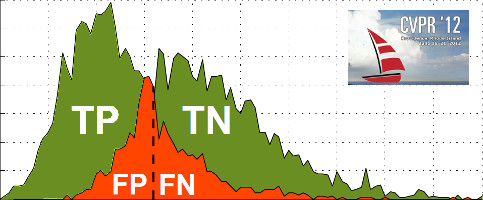

We provide the code and data to reproduce all experiments of our CVPR'12 paper Large Scale Metric Learning from Equivalence Constraints. In this paper, we raise important issues regarding the scalability and the required degree of supervision of existing Mahalanobis metric learning methods. These methods often rely on rather tedious optimization procedures that become computationally intractable on a large scale. With our Keep It Simple and Straightforward MEtric (KISSME), we introduce a simple yet effective strategy to learn a distance metric from equivalence constraints. Our method is orders of magnitude faster than comparable methods.
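The paper casts the decision "is this pair similar?" as a likelihood-ratio test on the pairwise difference vector. Both similar and dissimilar differences are modeled as zero-mean Gaussians. The summary below uses its own notation: $S$ and $D$ are the sets of similar and dissimilar pairs, and $x_{ij}$ is a difference vector. For the reference implementation, see LearnAlgoKISSME.m.

```latex
% Pairwise differences and their covariances for similar (S) and dissimilar (D) pairs
x_{ij} = x_i - x_j, \qquad
\Sigma_S = \frac{1}{|S|}\sum_{(i,j)\in S} x_{ij}\,x_{ij}^\top, \qquad
\Sigma_D = \frac{1}{|D|}\sum_{(i,j)\in D} x_{ij}\,x_{ij}^\top

% Log-likelihood ratio of the two zero-mean Gaussian hypotheses
\delta(x_{ij}) = \log\frac{p(x_{ij}\mid D)}{p(x_{ij}\mid S)}
  = \tfrac{1}{2}\,x_{ij}^\top\left(\Sigma_S^{-1}-\Sigma_D^{-1}\right)x_{ij}
  + \tfrac{1}{2}\log\frac{|\Sigma_S|}{|\Sigma_D|}

% Dropping the constant and the factor 1/2 leaves a Mahalanobis distance
d_M^2(x_i, x_j) = x_{ij}^\top M\, x_{ij}, \qquad
M = \Sigma_S^{-1} - \Sigma_D^{-1}
```

Learning therefore reduces to estimating two covariance matrices and inverting them, with no iterative optimization. In the paper, $M$ is additionally re-projected onto the cone of positive semidefinite matrices.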

Citation

If you use this code, please cite our paper:

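Martin Köstinger, Martin Hirzer, Paul Wohlhart, Peter M. Roth, and Horst Bischof. Large Scale Metric Learning from Equivalence Constraints. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2012.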

BibTeX reference for convenience:

author = {Martin K\"{o}stinger and Martin Hirzer and Paul Wohlhart and Peter M. Roth and Horst Bischof},

title = {{Large Scale Metric Learning from Equivalence Constraints}},

booktitle = {{Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR)}},

year = {2012}

}

Datasets

Documentation

The best place to start is the inline documentation of the MATLAB files. For each experiment (LFW, ToyCars, VIPeR, PubFig), a main file describes the features and database used. Our method is implemented in LearnAlgoKISSME.m, in particular in its learnPairWise method.
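The script below is a minimal, self-contained sketch of the KISSME computation as formulated in the paper. It estimates the covariances of similar and dissimilar pair differences, sets M = inv(SigmaS) - inv(SigmaD), and clips negative eigenvalues so that M is positive semidefinite. It is not a copy of learnPairWise. The toy data and the variable names (X, idxA, idxB, y, sqdist) are hypothetical and chosen only for illustration.

```matlab
% Minimal KISSME sketch on toy 2-D data (hypothetical values, not from the paper).
% Columns of X are samples; pair (idxA(k), idxB(k)) is similar if y(k) == 1.
X = [0 0.5 1 1   2 2.5;
     0 0   1 1.5 0 0.5];
idxA = [1 3 5 1 2 4];
idxB = [2 4 6 3 5 6];
y    = [1 1 1 0 0 0];

% Pairwise difference vectors for similar (S) and dissimilar (D) pairs
dS = X(:, idxA(y == 1)) - X(:, idxB(y == 1));
dD = X(:, idxA(y == 0)) - X(:, idxB(y == 0));

% Covariances of the differences (the model assumes zero mean)
SigmaS = (dS * dS') / size(dS, 2);
SigmaD = (dD * dD') / size(dD, 2);

% Metric from the likelihood-ratio test
M = inv(SigmaS) - inv(SigmaD);

% Re-project onto the PSD cone by clipping negative eigenvalues
M = (M + M') / 2;
[V, L] = eig(M);
M = V * diag(max(diag(L), 0)) * V';

% Squared Mahalanobis distance for every column of a difference matrix
sqdist = @(Diff) sum(Diff .* (M * Diff), 1);
distS = sqdist(dS);   % distances of the similar pairs
distD = sqdist(dD);   % distances of the dissimilar pairs
```

On this toy data, every similar pair ends up closer under M than every dissimilar pair. Clipping the eigenvalues of the symmetrized matrix gives the nearest positive semidefinite matrix in the Frobenius norm. On this toy data the clipping has no effect, because M is already positive definite.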

Running the experiments presented in the paper assumes basic knowledge of MATLAB. The code has been tested under Linux (64-bit) and Windows (32-bit and 64-bit) and should also work on other platforms with minor modifications. Reproducing the results of our method should take no more than five minutes, including installation.

Grabbing the Code & Data

To download and unpack the required files, run the following code snippet in MATLAB. Alternatively, open the links in a browser, then download and unpack the archives into a directory of your choice.

BASE_DIR = pwd; % directory to unpack into; change it to a directory of your choice

fnc = websave('kissme.zip', 'https://cloud.tugraz.at/index.php/s/QafSNyaB9Di5KAp'); % code, 0.03 MB

fnf = websave('kissme_features_basic.zip', 'https://cloud.tugraz.at/index.php/s/mdmwNGmPDzLHoCC'); % basic features, 55 MB

unzip(fnc, BASE_DIR); % extract code

unzip(fnf, BASE_DIR); % extract basic features

The provided features cover everything needed to reproduce the experiments. If you want to use the original extracted features (before PCA compression), download the kissme_features_full.zip archive (890 MB).

Installing other competing metric learning methods

The experiments described in the paper benchmark our method (KISSME) against other metric learning methods (LMNN, ITML, LDML, SVMs). Because of their different licenses, these are not pre-installed. If you agree to their licenses, install them with the following MATLAB snippet.

Quick Start: Running Experiments

Change the directory to KISSME/workflows/CVPR.

Pick the experiment of your choice and run the corresponding script, e.g., demo_viper.m.

To run all experiments:

% ToyCars, VIPeR

run(fullfile(cd,'demo_toycars.m'));

run(fullfile(cd,'demo_viper.m'));

% PubFig, LFW

run(fullfile(cd,'demo_pubfig.m'));

run(fullfile(cd,'demo_lfw_sift.m'));

run(fullfile(cd,'demo_lfw_attributes.m'));

Note: For LFW and PubFig, only KISSME is enabled by default, because some of the other algorithms take a long time to complete. To train all installed learning algorithms, uncomment the respective code. See the inline comments for details, e.g., in demo_lfw_sift.m.

License

The toolbox code is licensed under the BSD 3-Clause License ("modified BSD license"). If you use the code (i.e., our algorithm) in a scientific publication, please cite our paper. For the provided data, please check the included copyright notice, as the data is partly based on other sources.
