Keypoint Transformer: Solving Joint Identification in Challenging Hands and Object Interactions for Accurate 3D Pose Estimation

Abstract

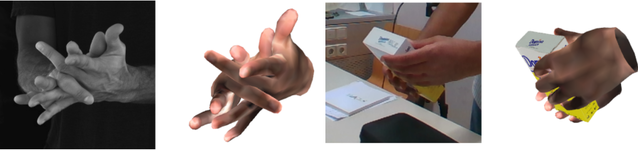

We propose a robust and accurate method for estimating the 3D poses of two hands in close interaction from a single color image. This is a very challenging problem, because large occlusions and frequent confusions between joints can occur. State-of-the-art methods solve this problem by regressing a heatmap for each joint, which requires solving two problems simultaneously: localizing the joints and recognizing them. In this work, we propose to separate these tasks by relying on a CNN to first localize joints as 2D keypoints, and on self-attention between the CNN features at these keypoints to associate them with the corresponding hand joints. The resulting architecture, which we call “Keypoint Transformer”, is highly efficient: it achieves state-of-the-art performance on the InterHand2.6M dataset with roughly half the number of model parameters. We also show that it can easily be extended to estimate, with high accuracy, the 3D pose of an object manipulated by one or two hands. Moreover, we created a new dataset of more than 75,000 images of two hands manipulating an object, fully annotated in 3D, which we will make publicly available.
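To make the separation between localization and recognition concrete, here is a minimal NumPy sketch of the data flow described above. It rests on several assumptions that the abstract does not state: keypoints are taken as local maxima of a single heatmap, features are bilinearly sampled from a CNN feature map at those keypoints, one single-head self-attention layer (without positional encoding) mixes the keypoint features, and a linear layer assigns each keypoint a joint identity. All function names, sizes, and the 43-class label set (2 × 21 joints plus a "not a joint" class) are hypothetical, and the weights are random rather than learned, so the predicted identities carry no meaning.

```python
import numpy as np

NUM_CLASSES = 43  # hypothetical: 2 hands x 21 joints + 1 "not a joint" class


def extract_keypoints(heatmap, threshold=0.5, max_points=42):
    """Return (x, y) positions of local maxima of a single 2D heatmap, strongest first."""
    h, w = heatmap.shape
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    # 3x3 non-maximum suppression: keep pixels equal to the max of their neighbourhood.
    windows = np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])
    is_peak = (heatmap >= windows.max(axis=0)) & (heatmap > threshold)
    ys, xs = np.nonzero(is_peak)
    order = np.argsort(-heatmap[ys, xs])[:max_points]
    return np.stack([xs[order], ys[order]], axis=1).astype(float)


def sample_features(feature_map, points):
    """Bilinearly sample a (C, H, W) feature map at N (x, y) points; returns (N, C)."""
    _, h, w = feature_map.shape
    x = np.clip(points[:, 0], 0, w - 1)
    y = np.clip(points[:, 1], 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    f = (feature_map[:, y0, x0] * (1 - wx) * (1 - wy)
         + feature_map[:, y0, x1] * wx * (1 - wy)
         + feature_map[:, y1, x0] * (1 - wx) * wy
         + feature_map[:, y1, x1] * wx * wy)
    return f.T


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over N keypoint tokens of size C."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability for the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return tokens + attn @ v  # residual connection keeps the sampled features


def classify_joints(tokens, w_cls):
    """Assign each keypoint token the index of its highest-scoring joint class."""
    return (tokens @ w_cls).argmax(axis=1)


rng = np.random.default_rng(0)
heatmap = np.zeros((32, 32))
heatmap[5, 7], heatmap[20, 11], heatmap[12, 25] = 1.0, 0.9, 0.8  # three toy "joints"
feature_map = rng.standard_normal((16, 32, 32))  # stand-in for CNN features (C=16)

points = extract_keypoints(heatmap)  # step 1: localize, without naming the joints
raw_tokens = sample_features(feature_map, points)
c = raw_tokens.shape[1]
wq, wk, wv = (0.1 * rng.standard_normal((c, c)) for _ in range(3))
tokens = self_attention(raw_tokens, wq, wk, wv)  # step 2: share context between keypoints
joint_ids = classify_joints(tokens, rng.standard_normal((c, NUM_CLASSES)))  # step 3: identify
print(points.tolist())  # [[7.0, 5.0], [11.0, 20.0], [25.0, 12.0]]
```

In a trained model the attention, feature, and classifier weights would be learned jointly. The point of the sketch is only that each keypoint's identity is decided after it has been localized, using context from all other detected keypoints, instead of by one heatmap per joint.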

Dataset

H2O-3D is a dataset with 3D pose annotations for two hands and an object during interaction. The annotations are obtained automatically using the optimization method proposed in HOnnotate. The 17 multi-view sequences (85 single-camera sequences) in the dataset show 5 different persons manipulating 10 different objects, which are taken from the YCB object dataset. The dataset currently contains annotations for 76,340 images, split into 60,998 training images (from 69 single-camera sequences) and 15,342 evaluation images (from 16 single-camera sequences). The evaluation sequences were carefully selected to cover the following scenarios:

- Seen object and seen hand: Sequences MBC0, SB4, SHP32, SSB1, SSC4 and SSPD3 contain hands and objects that are also used in the training set.

- Unseen object and seen hand: Sequences SHSB0, SHSB1, SHSB2, SHSB3 and SHSB4 contain the 004_sugar_box object, which is not used in the training set.

- Seen object and unseen hand: Sequences MEM0, MEM1, MEM2, MEM3 and MEM4 contain a subject with a different hand shape and color who is not part of the training set.
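The split and evaluation scenarios above can be written down as a small lookup table. The following Python sketch groups the evaluation sequences by scenario and checks that the stated counts are consistent (60,998 + 15,342 = 76,340 images and 69 + 16 = 85 single-camera sequences). The names `EVAL_SCENARIOS`, `SPLITS` and `scenario_of` are hypothetical and not part of any official H2O-3D toolkit, and the sketch assumes nothing about how the dataset is laid out on disk.

```python
# Evaluation sequences of H2O-3D grouped by scenario, copied from the list above.
EVAL_SCENARIOS = {
    "seen_object_seen_hand": ["MBC0", "SB4", "SHP32", "SSB1", "SSC4", "SSPD3"],
    "unseen_object_seen_hand": [f"SHSB{i}" for i in range(5)],
    "seen_object_unseen_hand": [f"MEM{i}" for i in range(5)],
}

# Image and single-camera sequence counts per split, as stated in the text.
SPLITS = {
    "train": {"images": 60_998, "sequences": 69},
    "evaluation": {"images": 15_342, "sequences": 16},
}


def scenario_of(sequence_name):
    """Return the evaluation scenario of a sequence, or None if it is not an evaluation sequence."""
    for scenario, names in EVAL_SCENARIOS.items():
        if sequence_name in names:
            return scenario
    return None


# Consistency checks: the per-split numbers add up to the dataset totals.
assert sum(s["images"] for s in SPLITS.values()) == 76_340
assert sum(s["sequences"] for s in SPLITS.values()) == 85
assert sum(len(v) for v in EVAL_SCENARIOS.values()) == SPLITS["evaluation"]["sequences"]

print(scenario_of("SHSB2"))  # unseen_object_seen_hand
```

Keeping the scenario of each sequence explicit makes it straightforward to report results separately for seen and unseen hands and objects.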