* This work is funded by the Christian Doppler Laboratory for Semantic 3D Computer Vision.

Accurate geo-localization in urban environments is important for many Augmented Reality and Autonomous Driving applications. Sensors such as GPS, compass and accelerometer are accurate enough for tasks like navigation, but not for applications that require an accurate 6DoF camera pose.

Standard registration methods based on image matching quickly become impractical, as many images need to be captured and registered in advance. Even collections such as Google Street View are rather sparsely sampled and cover only specific illumination and seasonal conditions, which makes image matching challenging.

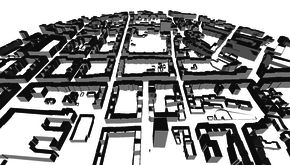

We propose methods for accurate camera pose estimation in urban environments from single images and 2.5D maps made of the surrounding buildings’ outlines and their heights. Our approach bridges the gap between learning-based and geometric approaches to geo-localization by using recent advances in semantic image segmentation.


Given a single color image frame and a coarse sensor pose prior, we first extract the semantic information from the image, and then look for the orientation and location that align a 3D rendering of the map with these segments. In our case, useful classes are façades, vertical edges and horizontal edges of the buildings.
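To make the alignment idea concrete, here is a minimal sketch that scores how well a rendered label map agrees with a semantic segmentation. The scoring rule (fraction of pixels with matching labels), the function name `alignment_score` and the integer label ids are assumptions chosen for illustration; this page does not specify the actual objective used.

```python
# Hypothetical label ids, chosen only for this illustration.
BACKGROUND, FACADE, VERTICAL_EDGE, HORIZONTAL_EDGE = 0, 1, 2, 3


def alignment_score(segmentation, rendering):
    """Return the fraction of pixels whose labels agree.

    Both inputs are equally sized 2D lists of integer class labels:
    one from the segmented photo, one from rendering the 2.5D map
    at a candidate pose. This agreement measure is an assumption.
    """
    total = 0
    matches = 0
    for seg_row, ren_row in zip(segmentation, rendering):
        for seg_label, ren_label in zip(seg_row, ren_row):
            total += 1
            if seg_label == ren_label:
                matches += 1
    return matches / total if total else 0.0


# Toy 3x4 "images": a facade framed by edges.
segmentation = [
    [0, 3, 3, 0],
    [2, 1, 1, 2],
    [2, 1, 1, 2],
]
# The same scene rendered from a slightly wrong pose (shifted left).
rendering_shifted = [
    [3, 3, 0, 0],
    [1, 1, 2, 0],
    [1, 1, 2, 0],
]

perfect = alignment_score(segmentation, segmentation)
shifted = alignment_score(segmentation, rendering_shifted)
```

A rendering from the correct pose scores higher than one from a shifted pose, which is the signal a pose search can exploit.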

The pose that best aligns the 3D rendering with the segments can be found either by random sampling of poses or by a novel, efficient and robust method: we train a deep network to predict the best direction in which to improve a pose estimate, given a semantic segmentation of the input image and a rendering of the buildings from this estimate. We then iteratively apply this CNN until it converges to a good pose.
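The two search strategies can be sketched as follows. This is a simplified illustration under stated assumptions, not the trained system: the pose is reduced to a hypothetical `(x, y, heading)` tuple, `score_fn` stands in for the rendering-to-segmentation alignment score, and `predict_direction` stands in for the CNN, returning -1, 0 or +1 per pose component. The step sizes, sampling ranges and the stopping rule (stop when no direction is predicted) are likewise assumptions.

```python
import random


def random_search(score_fn, prior, n_samples=500,
                  pos_range=10.0, heading_range=15.0, seed=0):
    """Sample poses around the sensor prior and keep the best-scoring one."""
    rng = random.Random(seed)
    best_pose, best_score = prior, score_fn(prior)
    for _ in range(n_samples):
        candidate = (
            prior[0] + rng.uniform(-pos_range, pos_range),
            prior[1] + rng.uniform(-pos_range, pos_range),
            prior[2] + rng.uniform(-heading_range, heading_range),
        )
        score = score_fn(candidate)
        if score > best_score:
            best_pose, best_score = candidate, score
    return best_pose


def iterative_refine(predict_direction, prior,
                     step=(1.0, 1.0, 1.0), max_iters=100):
    """Repeatedly move the pose one step in the predicted direction."""
    pose = prior
    for _ in range(max_iters):
        direction = predict_direction(pose)
        if all(d == 0 for d in direction):
            break  # assumed convergence criterion: no further move predicted
        pose = tuple(p + d * s for p, d, s in zip(pose, direction, step))
    return pose


# Toy stand-ins so the sketch runs without a renderer or a network.
true_pose = (3.0, -2.0, 5.0)


def toy_score(pose):
    # Higher is better: negative squared distance to the true pose.
    return -sum((p - t) ** 2 for p, t in zip(pose, true_pose))


def toy_direction(pose):
    def sign(delta):
        if abs(delta) < 0.5:
            return 0
        return 1 if delta > 0 else -1
    return tuple(sign(t - p) for t, p in zip(true_pose, pose))


prior = (0.0, 0.0, 0.0)
sampled = random_search(toy_score, prior)
refined = iterative_refine(toy_direction, prior)
```

With these toy stand-ins, the iterative variant needs only a handful of direction queries to walk from the prior to the true pose, while random sampling evaluates the score at every sampled candidate.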

This approach avoids the use of reference images of the surroundings, which are difficult to acquire and match, whereas 3D models appear to be broadly available. It also converges efficiently even when initialized far from the correct pose.

We evaluated our approach on 40 test images. Our results show a significant improvement over the sensors' estimates. The following plots compare the orientation and position errors of our approach and of the sensors' estimates, with respect to the ground-truth pose for each image. For more details, please refer to the paper.
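For readers who want to reproduce this kind of comparison, per-image orientation and position errors could be computed along the following lines. This is a sketch under assumptions: orientation is reduced to a single heading angle in degrees, position to planar coordinates in metres, and the function names are hypothetical; the exact error definitions are given in the paper.

```python
import math


def orientation_error_deg(estimated_deg, ground_truth_deg):
    """Absolute heading difference in degrees, wrapped to [0, 180]."""
    diff = (estimated_deg - ground_truth_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


def position_error(estimated_xy, ground_truth_xy):
    """Euclidean distance between estimated and true planar positions."""
    return math.hypot(estimated_xy[0] - ground_truth_xy[0],
                      estimated_xy[1] - ground_truth_xy[1])


# Headings of 350 and 10 degrees are 20 degrees apart, not 340.
wrap_example = orientation_error_deg(350.0, 10.0)
# A 3 m east, 4 m north offset is 5 m away from the ground truth.
offset_example = position_error((3.0, 4.0), (0.0, 0.0))
```

Wrapping the angular difference matters near north, where a naive subtraction would report an error close to 360 degrees for two almost identical headings.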