
Augmented Reality

| Augmented reality (along with the related topics of mixed reality and virtual reality) has been the primary research field of the team for many years. The team has made many seminal contributions in this area, covering the entire scope of augmented reality topics, including visualization, rendering, spatial interaction, authoring, mobile tracking, software architectures and applications. | |

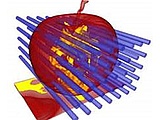

| Situated visualization: When consuming information using augmented reality, the user’s perception is necessarily affected by the situated nature of the presentation, which is, by definition, related to the real environment. This form of visualization is frequently called situated visualization. It differs from visualization on conventional (desktop) displays because it must strike a compromise between virtual and real elements in the user’s field of view. Two important topics that we have investigated in this context are, first, the placement of virtual labels and other overlays in the user’s field of view such that clutter and undesired occlusions are minimized, and, second, X-ray visualization, which reveals occluded information by simulating transparency effects on occluding real objects. |

| Coherent rendering: In application areas such as entertainment or immersive shopping, the capability to graphically render mixed reality scenes such that the virtual elements are indistinguishable from the real world is highly desirable. Such a consistent rendering is only possible if the photometric properties of the real world are known. In particular, we need information on the incident lighting and the material properties of the objects in the scene. This is challenging, as both are, in general, not known. Objects can move and light sources can change. We have worked on methods to recover photometric properties and compute approximate global illumination robustly, in real time and from a minimum of observations. |

| Spatial interaction: In contrast to desktop computing, where interaction is typically constrained to keyboard and mouse, augmented reality interfaces afford a variety of form factors. Affordable augmented reality headsets have recently become available. However, since the early 2000s, handheld devices such as smartphones have been the only widely available form factor for deploying mobile augmented reality, and they will remain pervasive for the foreseeable future. While a lot of work on next-generation user interfaces remains on a conceptual (mock-up) level, we have a reputation for exploring interface designs through operational technical implementations. This work has spanned such diverse subjects as transparent devices, conversational agents, navigation and multi-device interfaces. |

| Mixed reality authoring: Once mature platforms become widespread, augmented reality will be perceived as a new type of medium, alongside movies, TV, mobile games and so on. While content creation for entertainment benefits from an economy of scale (a particular movie or game can amortize large production costs by reaching a large audience), content creation for professional applications, such as navigation or repair instructions, requires a larger degree of automation to be feasible. Dr. Schmalstieg and his team have investigated approaches that enable an author to leverage existing data sources, such as CAD, product databases, or even printed manuals and YouTube videos, in the creation of augmented reality content. |

| Tracking: The one key enabling technology for mobile augmented reality is real-time 3D tracking. Without such tracking, proper registration of virtual and real is impossible, and augmented reality applications are limited to placing “floating labels” in the general area of a point of interest. The challenge of mobile tracking lies in finding efficient algorithms that determine the 3D pose of a mobile device quickly and robustly. Dr. Schmalstieg and his team have delivered seminal work on mobile tracking, starting with the first fully autonomous implementations of marker tracking (2003) and natural feature tracking (2007) on the commercial devices of the time. The findings on the efficiency of optical tracking methods were widely adopted. Some of the methods were acquired by Qualcomm and used in the Vuforia software product, which is a market leader today. Later research in Dr. Schmalstieg’s group investigated the even more challenging problem of outdoor tracking “in the wild”, where the high cost of data collection and rapid visual changes make conventional model-based approaches infeasible. |

| Collaborative augmented reality: Since the late 1990s, we have explored the potential of augmented reality for computer-supported collaborative work. The result of this research endeavor was the first augmented reality system for co-located collaboration, called Studierstube. Before the arrival of commodity techniques such as WiFi or multiplayer game engines, designing and building a software architecture that combined diverse hardware (tracking, head-mounted display), real-time display and distributed multi-user simulation with low latency was a major challenge. Consequently, the highly cited works that were published as a result of this project contribute equally to computer-supported collaborative work and to software engineering. |

Visualization


| Visualization is an essential element of visual computing. This is true for augmented and virtual reality, but even more so for conventional interactive systems that work with big data and need to provide a comprehensive user interface to an information worker. Big and complex data sets are produced by many application areas, but particularly interesting sources come from the life sciences. |


| Medical visualization: In medicine, visualization is most often used in combination with volumetric imaging sources. Medical visualization can support diagnosis, planning of surgeries or minimally invasive treatment, and intra-operative navigation. Volumetric visualization is particularly challenging because it must handle huge datasets in real time. We have investigated visualization tools for a number of clinical applications, including liver surgery, tumor ablation, forensic analysis and cranial surgery. |

| Information visualization and display ecologies: When visualization systems encompass large amounts of heterogeneous data, the most common approach is to present multiple views or windows. Particularly on very large displays, the user may have difficulty identifying corresponding pieces of information. Visual links provide an attractive visual aid for connecting these pieces. Dr. Schmalstieg and his team have investigated various efficient and effective ways to add visual links as a feature to existing desktop systems in a minimally invasive way that lends itself to everyday use. |

Real-time graphics


| Real-time graphics are not only used for games and virtual reality, but increasingly also for simulation and visualization. The GPU architecture with thousands of computing cores lends itself to the development of highly parallel algorithms. We work on new parallel programming techniques for the GPU and on applications in graphics and procedural modeling. |

| GPU techniques: We have worked on advanced parallel programming techniques on the GPU. A particular area of interest lies in algorithms that do not have parallel workloads immediately available up front, but require dynamic scheduling. Examples of such algorithms are sparse matrix operations, deep tree traversals and adaptive rendering techniques. Dynamic scheduling also lends itself to enforcing priorities and carefully balancing producer-consumer problems. |
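
The idea of dynamic scheduling with priorities can be illustrated with a small sequential sketch. This is only a CPU-side analogy written in Python, not the team's GPU implementation: the function `run_dynamic`, the toy tree `TREE` and the use of tree depth as priority are all assumptions made for illustration. The point it shows is that work items are not known up front; each processed task may produce new tasks, and a priority queue decides what runs next.

```python
import heapq
from itertools import count

def run_dynamic(root_tasks, expand):
    """Process tasks in priority order; expand() may emit new tasks at run time.

    root_tasks: iterable of (priority, task) pairs known up front.
    expand:     hypothetical callback (priority, task) -> list of (priority, task).
    Returns the order in which tasks were processed.
    """
    tie = count()  # tie-breaker keeps insertion order among equal priorities
    queue = [(p, next(tie), t) for p, t in root_tasks]
    heapq.heapify(queue)
    order = []
    while queue:
        priority, _, task = heapq.heappop(queue)  # lowest value runs first
        order.append(task)
        # The consumer of one task is the producer of the next ones.
        for child_priority, child in expand(priority, task):
            heapq.heappush(queue, (child_priority, next(tie), child))
    return order

# Toy workload (assumption): a tree traversal where children are only
# discovered when their parent is processed, and depth is the priority.
TREE = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1"],
        "a1": [], "a2": [], "b1": []}

def expand_tree(priority, node):
    return [(priority + 1, child) for child in TREE[node]]

order = run_dynamic([(0, "root")], expand_tree)
print(order)
```

On a GPU, the single loop would be replaced by many threads pulling from shared queues concurrently, which is where balancing producers and consumers becomes the hard part.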

| Procedural modeling is essential in applications which require very large geometry sets at a level of detail which cannot be created by hand. For example, detailed city models can contain hundreds of thousands of buildings and billions of polygons. Such large models may not even fit into the available memory. We have developed the first approach to evaluate procedural models on the fly, thereby supporting literally infinitely detailed models. |
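
To make the notion of on-the-fly evaluation concrete, here is a minimal Python sketch under stated assumptions. It is not the team's approach; the functions `building` and `visible_buildings`, the grid layout and the parameter ranges are hypothetical. It only shows the underlying principle: if each piece of geometry is a deterministic function of its position, nothing needs to be stored, and only the part of the model currently in view is ever generated.

```python
import random

def building(ix, iy, seed=0):
    """Derive one building's parameters deterministically from its grid cell.

    Seeding with a string is reproducible across runs, so the same cell
    always yields the same building without storing anything.
    """
    rng = random.Random(f"{seed}:{ix}:{iy}")
    return {
        "cell": (ix, iy),
        "floors": rng.randint(1, 40),      # hypothetical parameter range
        "width": rng.uniform(8.0, 30.0),   # hypothetical parameter range
    }

def visible_buildings(view_min, view_max, seed=0):
    """Lazily yield buildings for the grid cells inside the current view."""
    (x0, y0), (x1, y1) = view_min, view_max
    for ix in range(x0, x1 + 1):
        for iy in range(y0, y1 + 1):
            yield building(ix, iy, seed)

# Only the six cells in view are evaluated, however large the city is.
in_view = list(visible_buildings((0, 0), (1, 2)))
print(len(in_view), in_view[0]["cell"])
```

Because the city is defined by a rule rather than by stored data, memory use depends on the size of the view and not on the size of the model.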

| Virtual reality rendering: High-quality rendering for virtual reality applications requires minimizing perceived latency. We introduce a novel object-space rendering framework which decouples shading from rendering. The shading information is temporally coherent and can be efficiently streamed over a network. Our method also lends itself to exploiting spatio-temporal coherence, for example, through foveated or adaptive rendering. |
